In the first week of May, Tori and I completed our work on the Six Week Prototype for the Ruby Bridges Project. It was presented, and then folded into a much larger presentation about our progress throughout the first year of our MFA program. As classes are starting back up, I wanted to make a post summarizing my journey over the last year, the results of Ruby Bridges, and my current starting point.

At the beginning of the year, I focused my efforts on the interactions between game design, education, and virtual reality. For me, this meant a lot of exploration and a technical education in these areas.

My early projects focused on improving my skills in Unity. I worked on team projects for the first time in Computer Game I and obtained a real introduction to game design and game thinking. This also allowed me to develop my own workflow and organization in Unity. While exploring my personal workflow, I became interested in using VR, specifically Google Cardboard, to organize materials and form connections throughout the scope of a project. The result was the MindMap project, which was a great introduction to mobile development and Google Cardboard but proved to be of limited use for my work. It was tested using materials from my Hurricane Preparedness Project, a 10-week prototype developed to provide virtual disaster training for those in areas threatened by hurricanes. The Hurricane Preparedness Project was my first time using Unity for VR and developing with the HTC Vive. The topics explored, including player awareness in VR, organization of emotional content, and player movement in a game space, would eventually become the basis of my work on the Ruby Bridges Project.

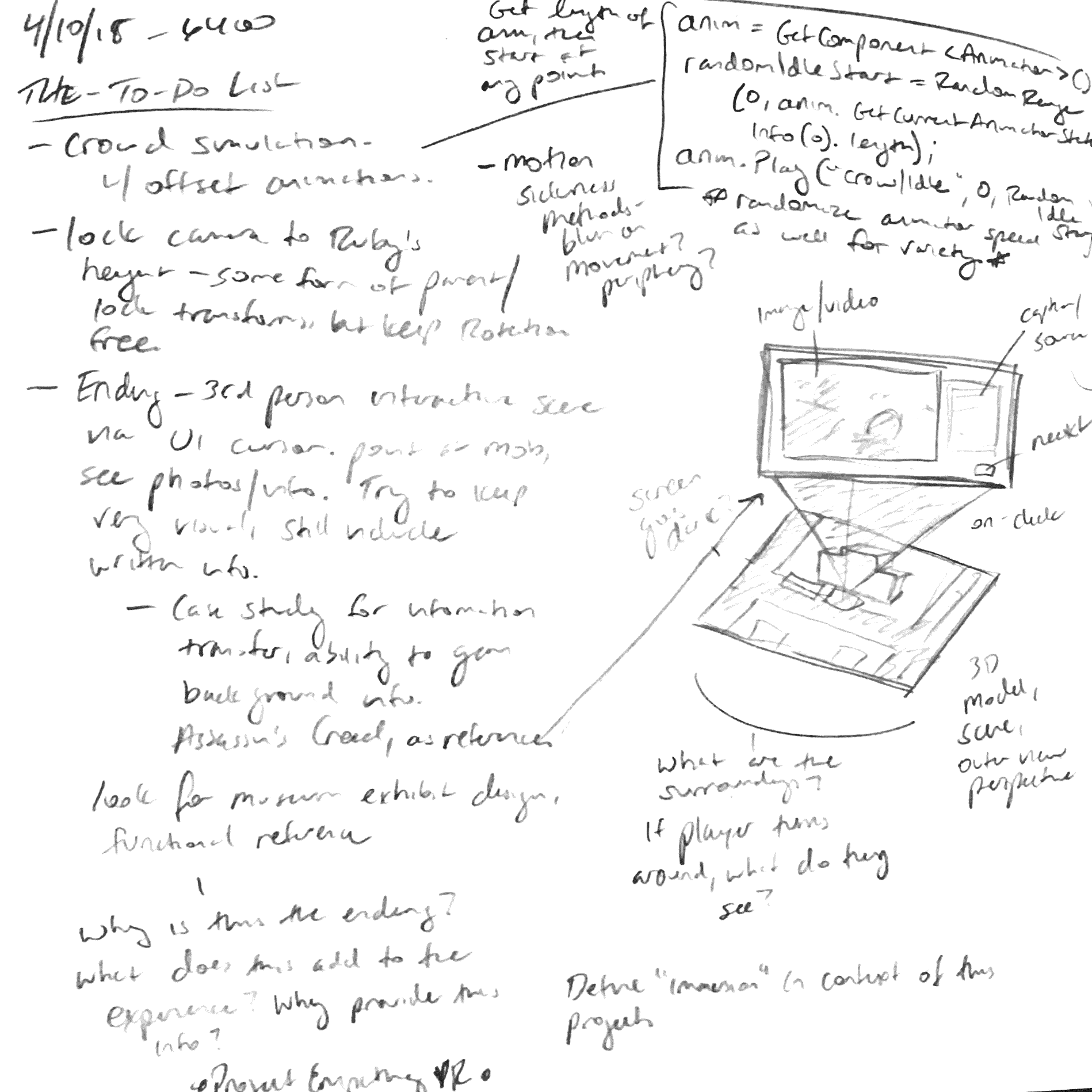

There has been a clear evolution in my own design process and focus, mainly a shift from visual organization to functional prototyping. Earlier in the year I still had a heavy focus on visual elements and art assets, but in the game design projects the player's experience suffered because the game was not fully functional. By the spring, I had shifted completely into prototyping and non-art assets. All of these projects challenged my process and boosted my technical skills, and I then brought these technical developments into a narrative context.

EDUCATIONAL AND EMOTIONAL STORYTELLING THROUGH IMMERSIVE DIGITAL APPLICATIONS

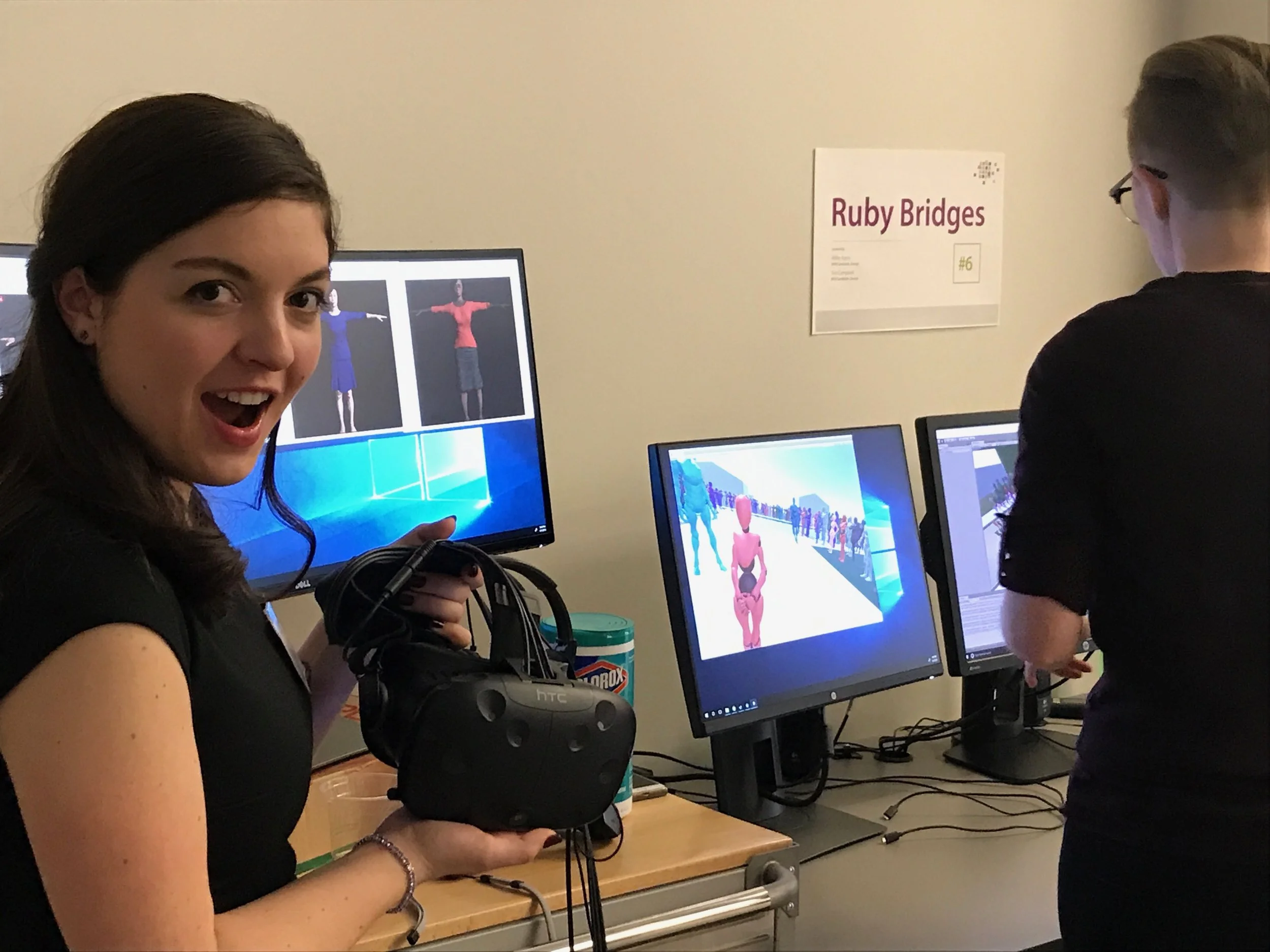

In the Spring, Tori Campbell and I began working on our concept for the Ruby Bridges Project. Working together, we would like to use motion capture and virtual reality to explore immersive and interactive storytelling. Ultimately, we are examining how these concepts can be used to change audience perception of the narratives and of themselves. Ruby Bridges' experience on her first day of school is the narrative we've chosen to focus on.

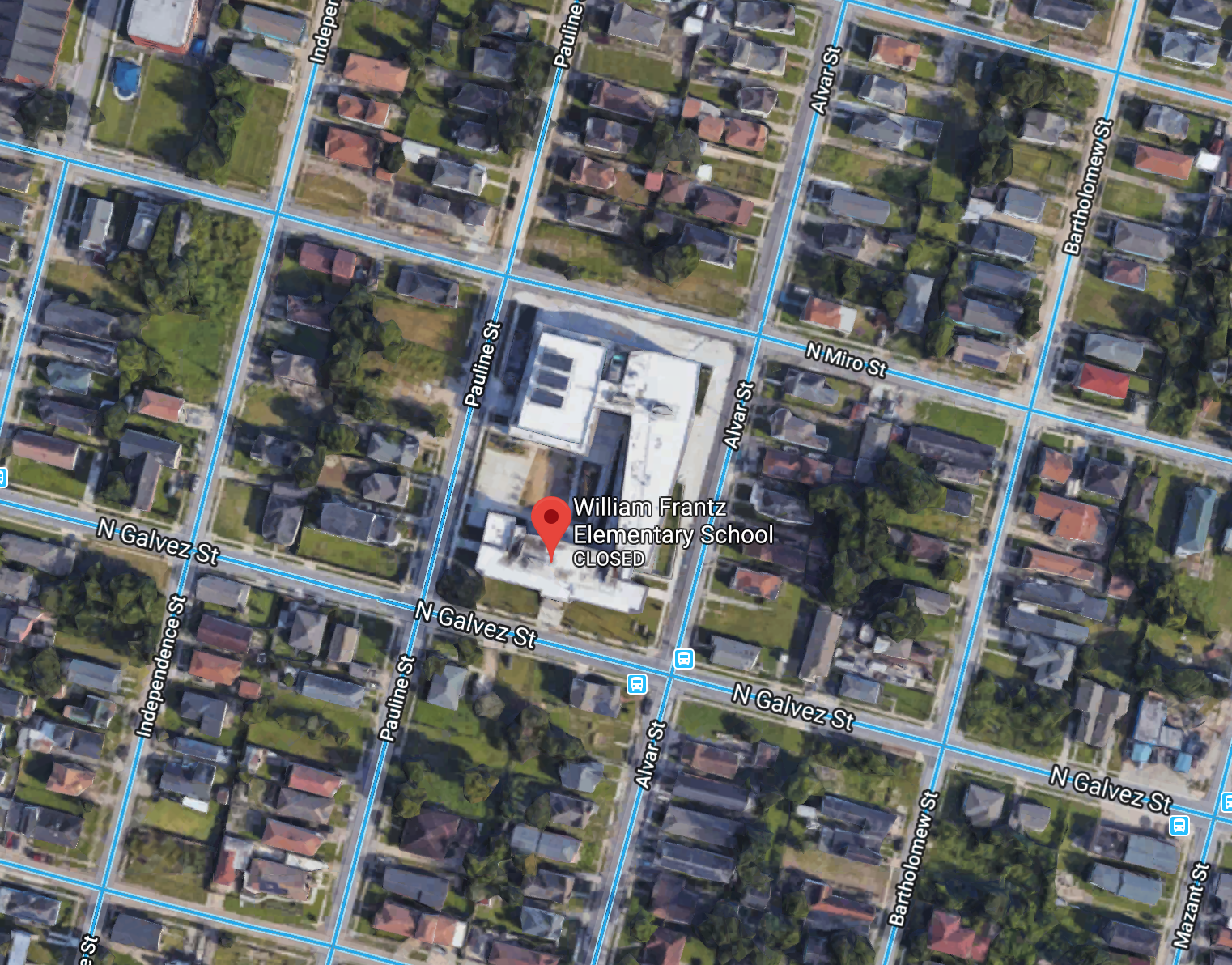

Ruby was one of five African-American girls to be integrated into an all-white school in New Orleans, LA in 1960. She was the only one of those girls to attend William Frantz Elementary School. At 6 years old, she was told only that she would be attending a new school and to behave herself. That morning, four U.S. Federal Marshals escorted her to her new school. Mobs surrounded the front of the school and the sidewalks, protesting the desegregation of schools by shouting at Ruby, threatening her, and showing black baby dolls in coffins.

This scene outside the front of the school became our prototype in VR.

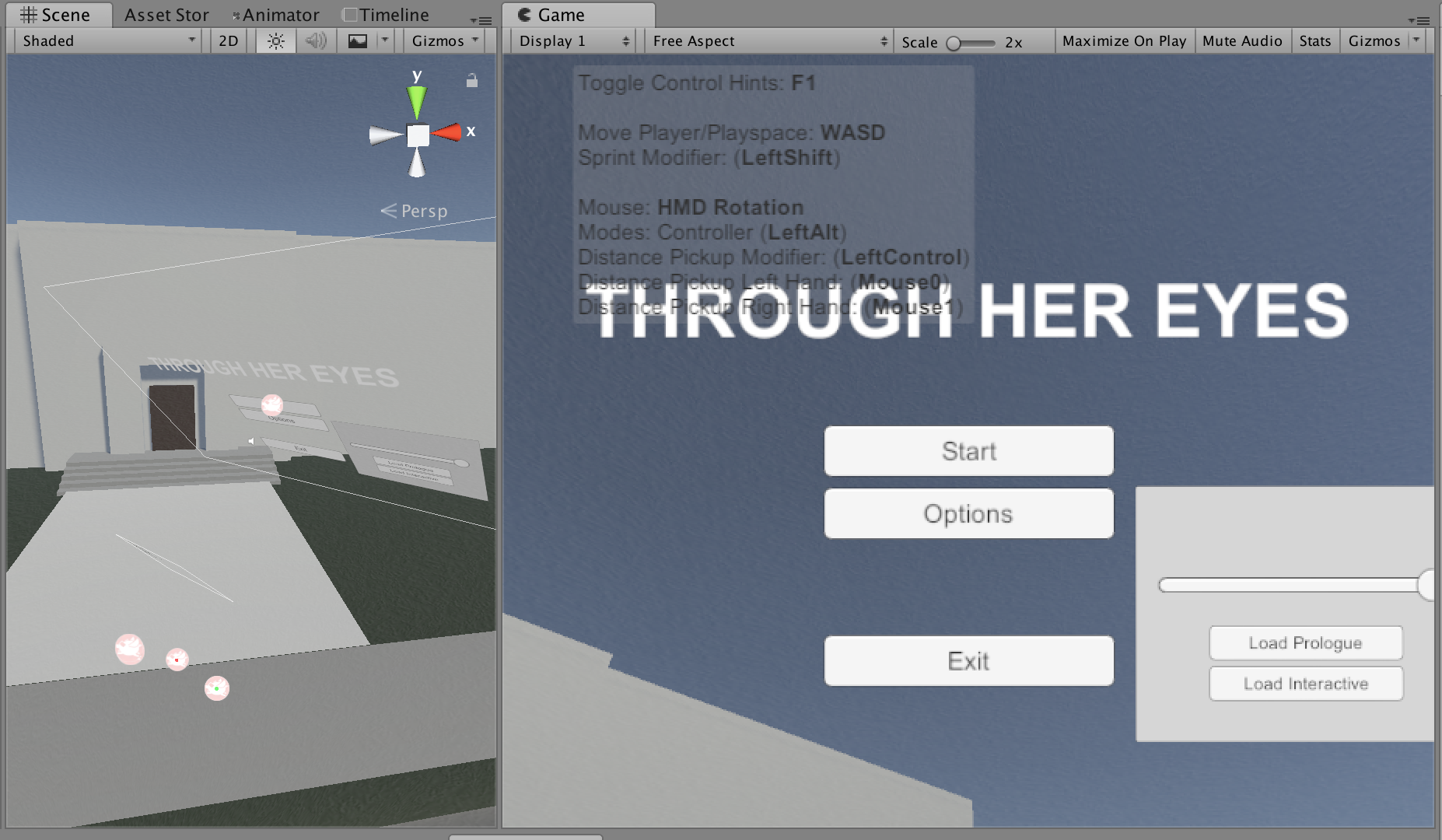

The Four Week Prototype focused on developing technical skills that we would need moving forward, specifically navigation, menu/UI, and animation controls. In doing so, I learned not just how to make these functions work, but also the pros and cons of each. This allowed me to make more educated decisions in the design of our Six Week Prototype. We also gathered motion capture data from actors so that we could work with it in a VR space and experiment with controlling the animations.

My goal with the Six Week Prototype was to create a fully functional framework for the experience, something with a beginning, middle, and end. I created a main menu, a narrative transition into a Prologue scene, the actual Prologue scene where the user is Ruby's avatar seeing from her perspective, and then an interactive scene where the user can examine the environment from a third-person view. This view would provide background information and historical context, and let the user drop into the scene from another perspective. Where the broad goal of the Four Week Prototype was technical development, this project examined different levels of user control, their effects on the experience of the scene, and how to create an experience that flows smoothly from scene to scene even with these different levels of control.

This prototype became a great first step into a much larger project. We learned a lot about creating narrative in VR, and through demonstrations with an Open House audience we discovered just how much impact a simple scene with basic elements can have on the viewer.

THEORY

Broadly, my thread going into the year was how virtual reality can be combined with game design for educational purposes. Through these experiences, I was able to refine that to how immersion and environmental interaction along with game design can be used to form an educational narrative experience.

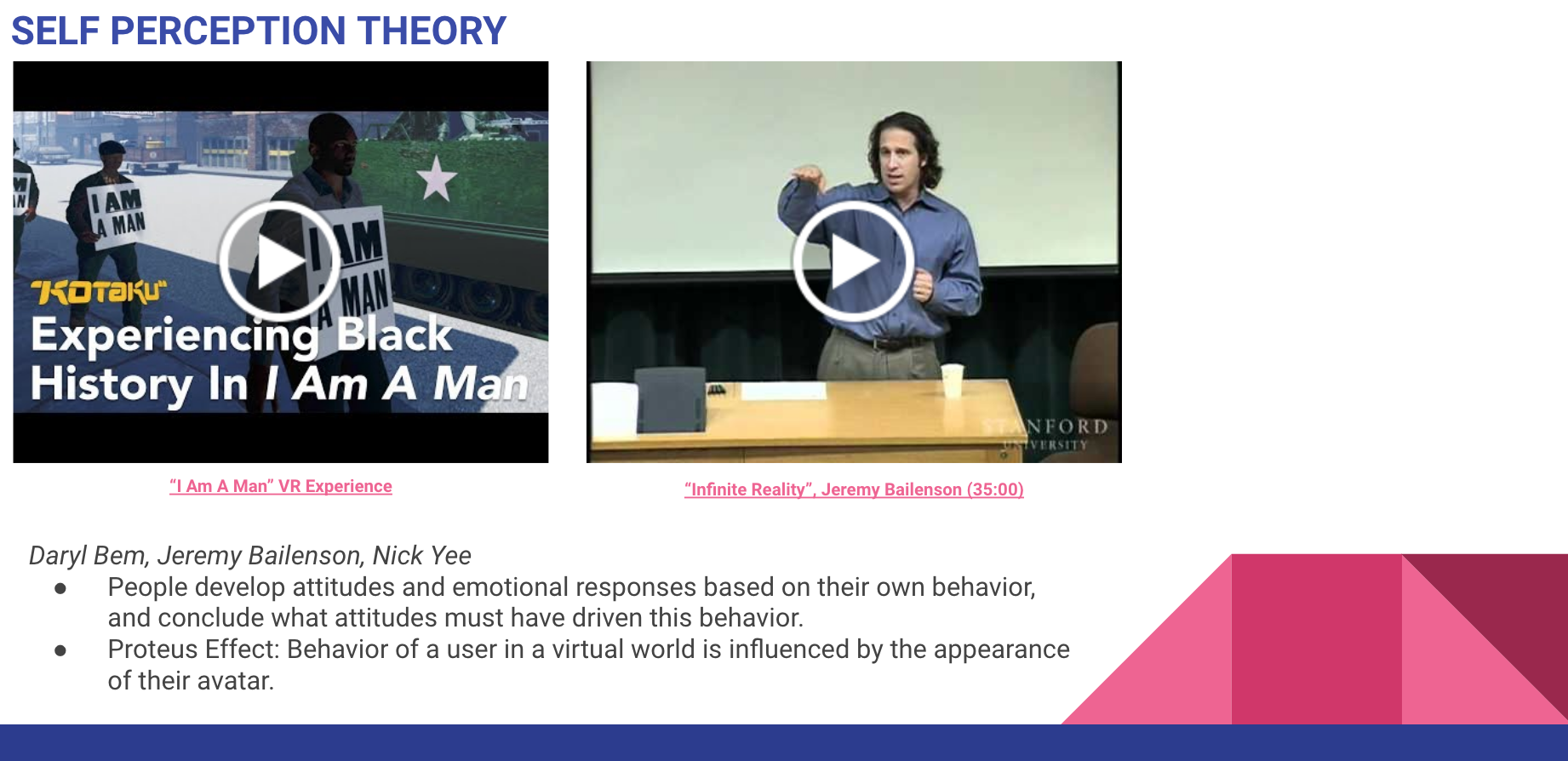

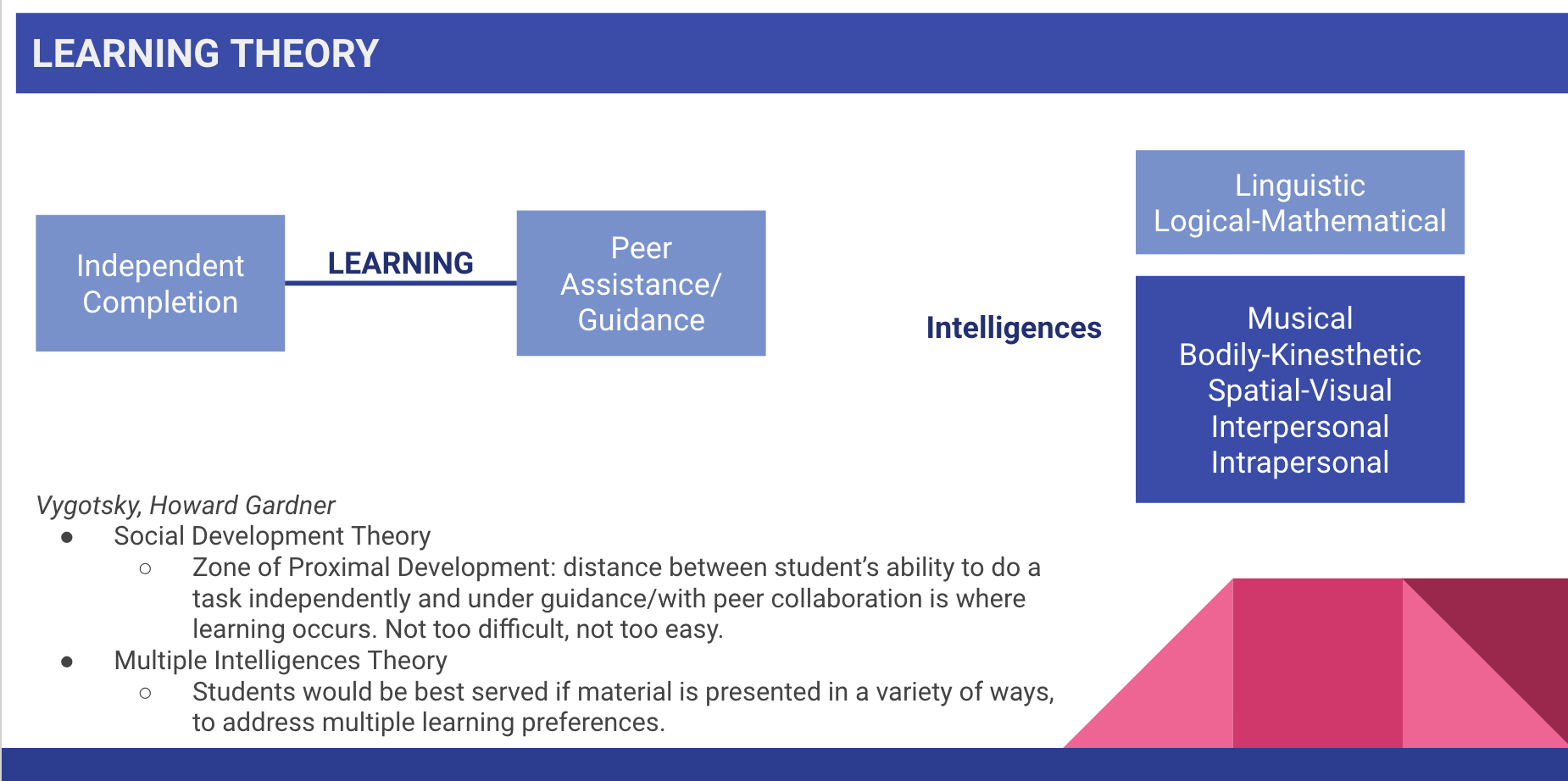

Tori and I are focusing on different but connected elements while working on this project. I am working specifically with theories concerning self-perception, learning, and gamification. Structuring these together, I form a framework for my research. Self-perception theory is connected through the concept of perspective-taking, representing the user and how they reflect back on themselves and their experiences. Gamification represents the interaction the user has with their environment and provides the virtual framework for the experience using game design concepts. Learning theory places the whole experience in the context of education and the "big picture".

WHAT'S NEXT?

Over the next year, I will be continuing to work with Tori on the next stages of the Ruby Bridges Project. While we are still discussing our next steps, I would like to explore more environment building and the structure of the experience. The Six Week Prototype was a great learning experience for how to set up a narrative flow and work through different levels of interactivity and user experience, but there are still so many other directions to push it in. One is having the crowd react back to Ruby by throwing objects, yelling specifically at her, or even keeping all of their eyes constantly gazing down at her, further increasing their menacing presence. Another is playing with perspective-taking so users can switch back and forth between different members of a scene, and determining whether that ability contributes positively to the scene. A third is pushing other concepts of gamification, such as giving users a task while they are in the scene to highlight aspects of the environment (the closeness of the crowd, the size of Ruby, etc.). Manipulating these environmental aspects will likely be the next step for me.

I will continue to research the theoretical framework highlighted above and will likely be making modifications as I start to delve more into these topics. My classes begin next week, and as part of that I will be taking Psychobiology of Learning and Memory. This will likely have an impact on the theoretical framework, and I'm very excited to take what we learn in there and potentially apply it to the experiences.

On the technical side, I will be building small-scale rapid prototypes to test these concepts as main development on Ruby Bridges continues. Furthermore, I would like to experiment with mobile development on the side to see if a similar experience to our prototype could be offered with various mobile technologies, such as Google Cardboard or Gear VR, perhaps even the Oculus Go.

For now, I'll be organizing my research and getting ready to hit the ground running.