Year 2 is now off and running!

Most of my energy over the past three weeks has gone into the first project of the year: a five-week team effort for 6400. This is the same project that produced the MoCap Music Video last year.

Concept

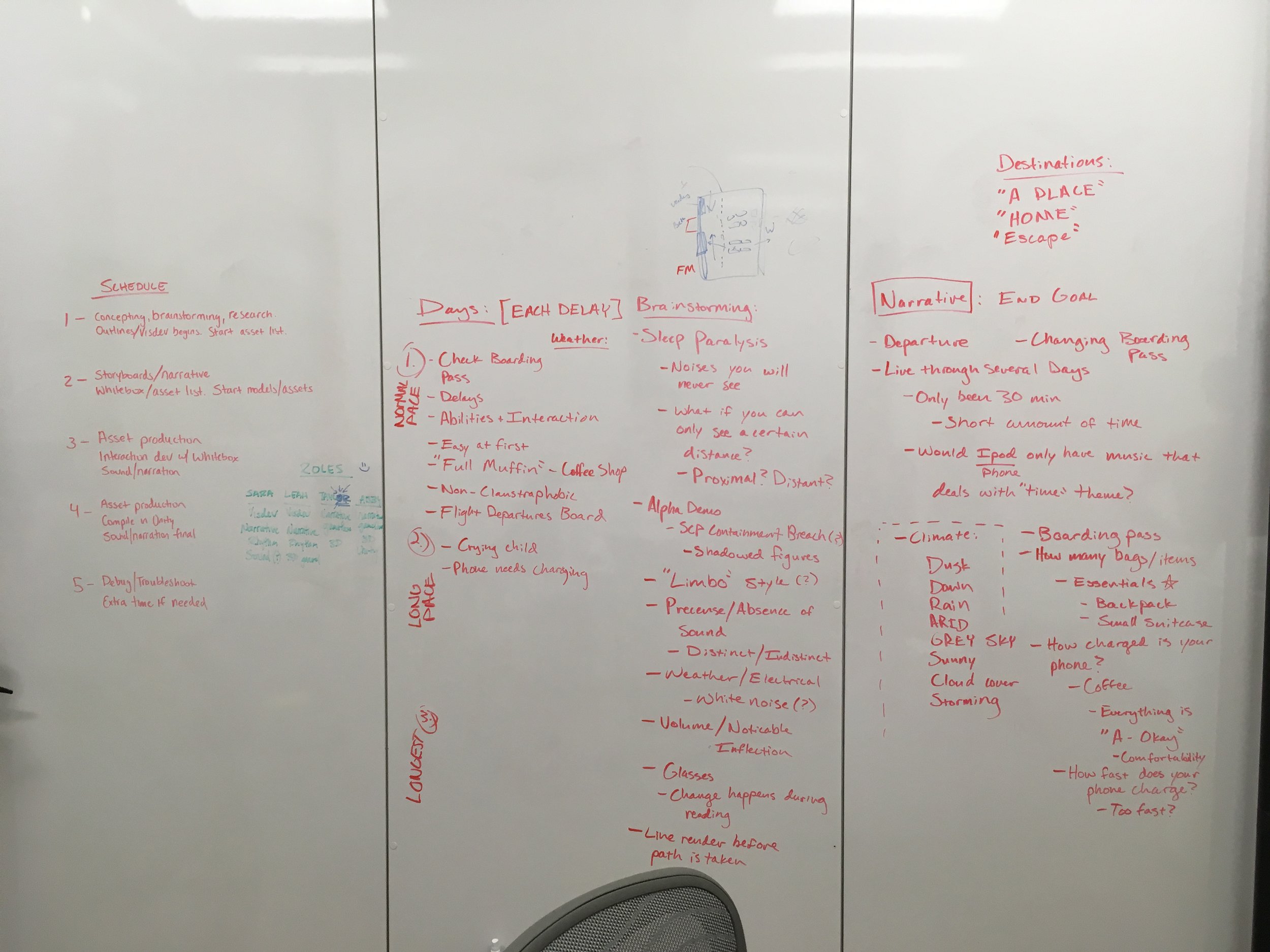

Our team was given a due date and told to make something, which left it very open to interpretation. My team includes two second-year DAIM students (Taylor Olsen, Leah Coleman) and one first-year student (Sara Caudill). We eventually settled on creating a VR experience based on liminal spaces, set specifically in an airport, in which the viewer gradually loses track of time and identity as the experience goes on.

Liminal spaces are typically described as spaces of transition, or "in-between" places: thresholds. Common examples are school hallways on the weekend, elevators, or truck stops. Time can feel distorted, reality a bit altered, and boundaries begin to dissolve. They are rarely the destination; the goal usually lies before or after them. The sense of prolonged waiting and distorted reality is what we intend to recreate in this experience. By placing the viewer at an airport gate and letting them observe altered effects around them, such as compressed or expanded time, we will bring the viewer into our own liminal space.

All of our team members were interested in working with VR and games, so I looked for examples of liminal spaces that already exist within games. The Stanley Parable places the player alone in an office building, seemingly at night, which contributes to the game's odd feeling: you never see another human, and the goal is to escape. The presence of a narrator and instructions (even though the player chooses whether or not to follow them) keeps it from being a true liminal space, but I feel the setting itself makes a strong nod in that direction.

Silent Hills P.T. is much closer to the feeling we're going for. The player repeatedly traverses the same hallway, though with each pass the hallway is slightly altered. Player identity is minimal, the passage of time is uncertain, and the player is constantly in a state of transition, looking for the end.

Sightline: The Chair became an important reference for us. Developed early on for the Oculus, it seats the player in a chair to look around at an environment that constantly morphs and shifts around them. The key point is that the changes happen while the player is looking away, so they are already in place when the player looks back. This is an element I very much want to incorporate into our game: it really messes with the flow of time and creates a surreal feeling. Importantly, the player cannot interact with any of the objects around them; they must simply sit and wait for the changes to occur.

Progress

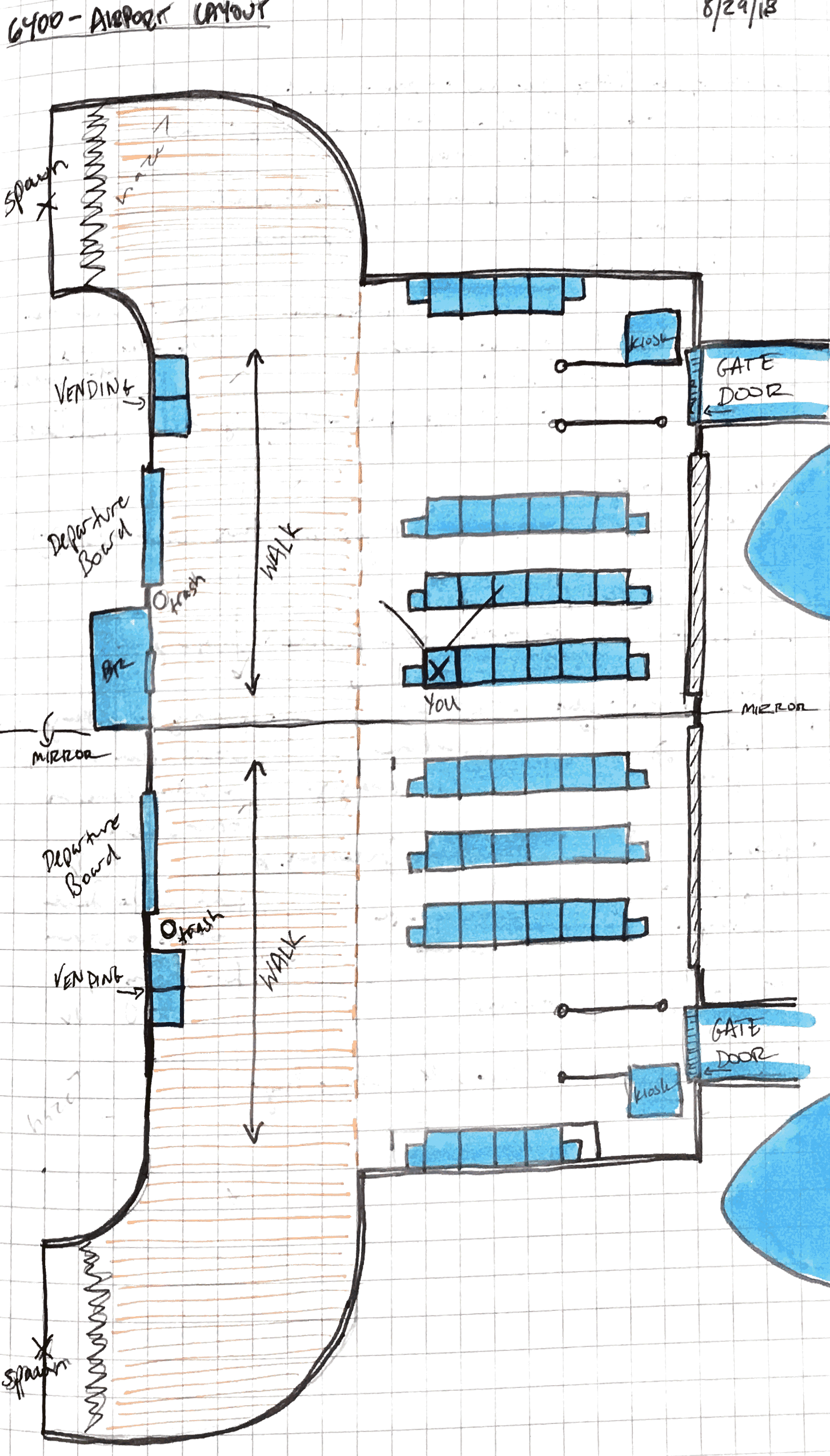

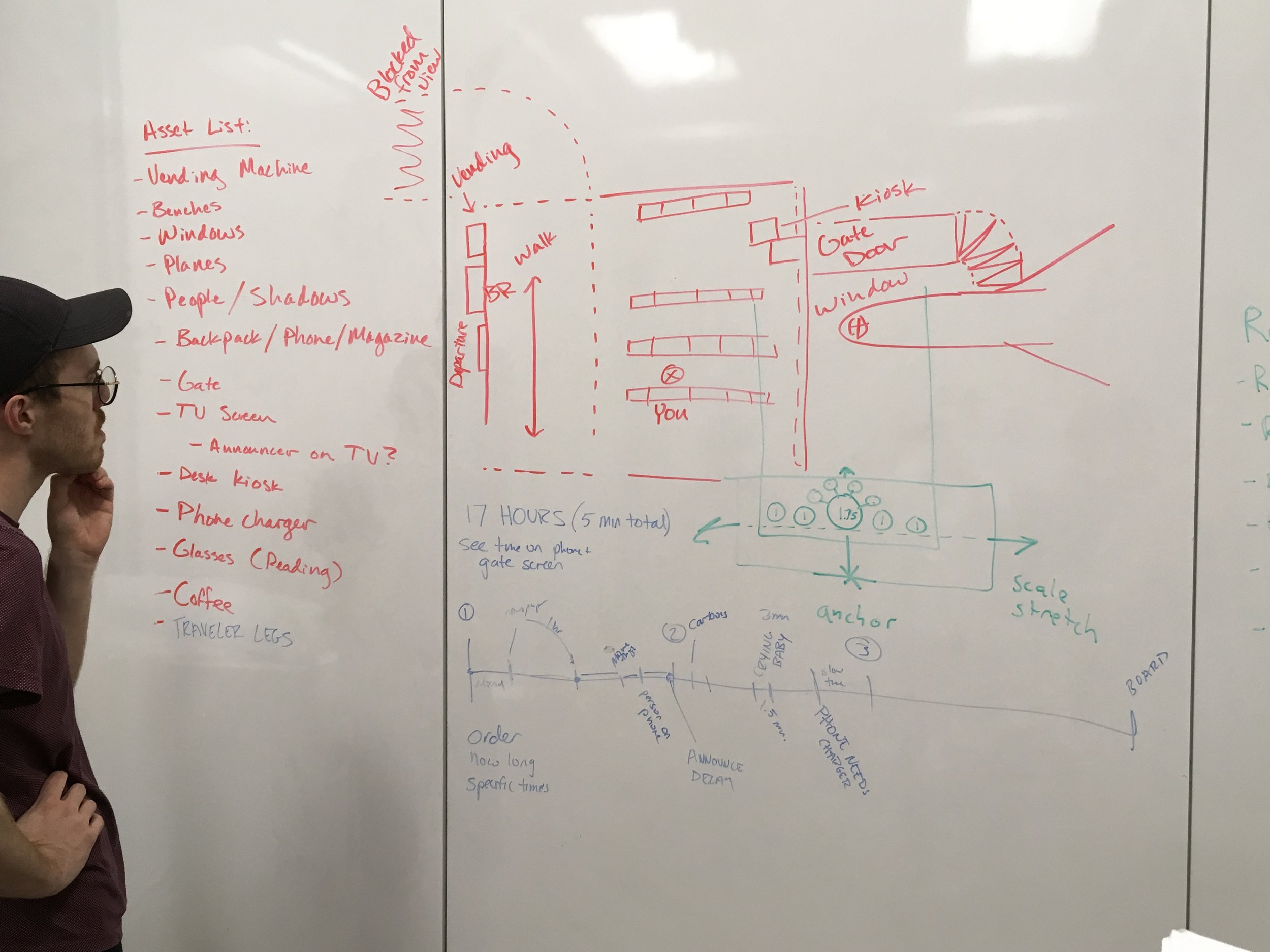

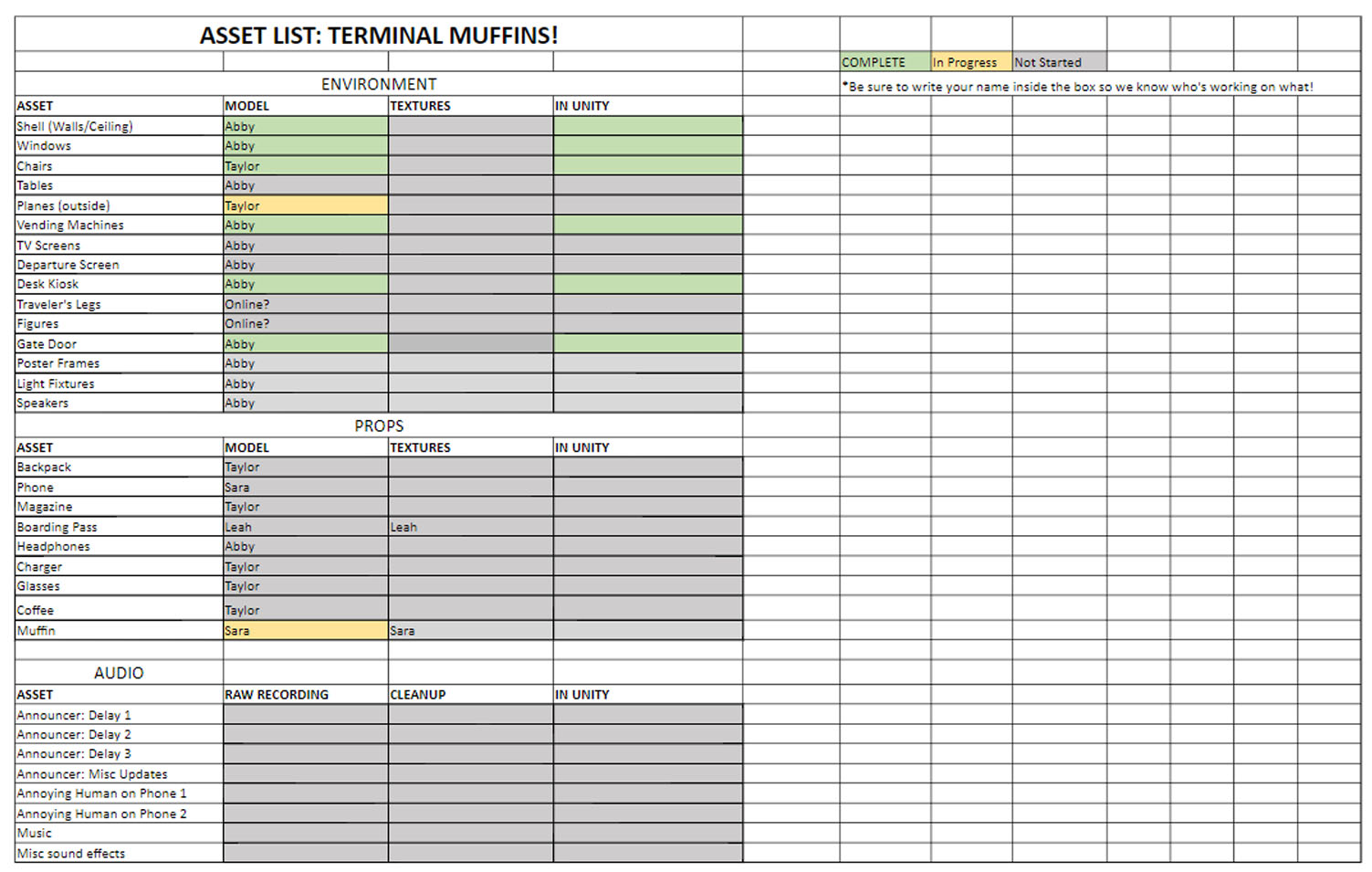

From there, we met as a team and began planning out the experience: interactions, the layout of the airport, how time would pass, and what events would happen. We put an asset list and a development schedule online. We wanted to make sure everyone on the team was learning new skills they were interested in and teaching others the skills they already have. Sara and Leah focused on visual and concept development: the color keys, the rhythm of the experience, and so on. Taylor gathered reference photos and began modeling the 3D assets we would need for the airport.

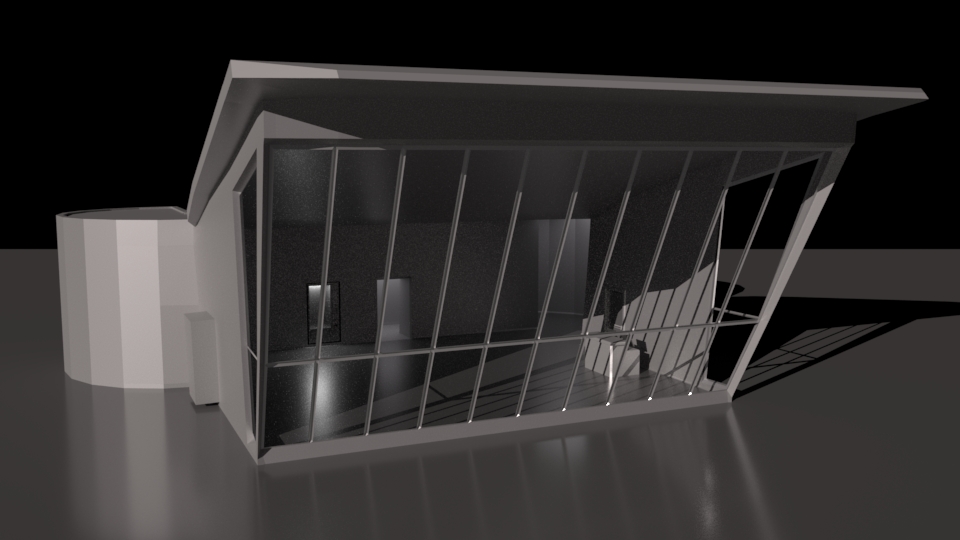

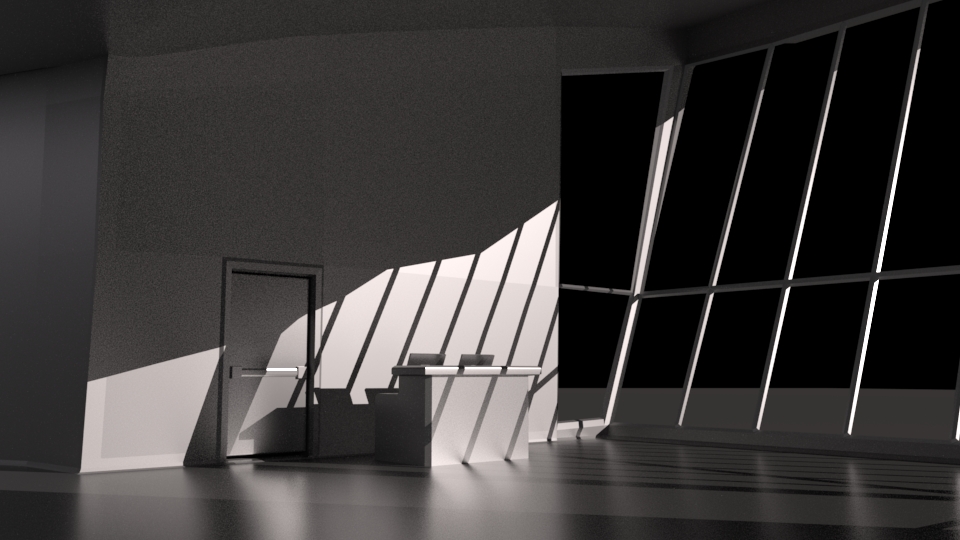

I spent the last few days modeling the airport environment and beginning some of the interaction work in Unity. Working from the layout we created in the team meeting, I finished the airport shell and started on some of the other environmental assets: a gate desk, a vending machine, and gate doors.

I brought those models into Unity to start developing some code. Taylor made the chairs for the gate, so I placed those and got a basic setup going.

I began working on some audio scripts to randomly generate background noise and events: an assistance cart beeping by, announcements being made, and planes taking off and landing. Those are nearly done, and I'll be posting an update video soon with the progress made.
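The audio scripts themselves aren't shown here, so below is a minimal sketch of one common way to randomize ambient events, written in Python rather than Unity C#: each sound gets a minimum and maximum wait, and after it plays, a fresh random delay is drawn. `AmbientEvent`, `AmbientScheduler`, the event names, and all gap values are hypothetical placeholders, not the project's actual code. In Unity, `update` would presumably be called each frame with `Time.time`, and each fired name would trigger playback, for example via `AudioSource.PlayOneShot`.

```python
import random
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class AmbientEvent:
    name: str
    min_gap: float          # shortest wait between plays, in seconds
    max_gap: float          # longest wait between plays, in seconds
    next_time: float = 0.0  # when this event is next due


class AmbientScheduler:
    """Fires each event after a random delay drawn when it last played."""

    def __init__(self, events: List[AmbientEvent], seed=None):
        self.rng = random.Random(seed)
        self.events = events
        for e in self.events:
            e.next_time = self.rng.uniform(e.min_gap, e.max_gap)

    def update(self, now: float) -> List[str]:
        """Return the names of events due at time `now` and reschedule them."""
        fired = []
        for e in self.events:
            if now >= e.next_time:
                fired.append(e.name)
                e.next_time = now + self.rng.uniform(e.min_gap, e.max_gap)
        return fired


# Simulate ten minutes at a fixed 0.5 s step (gap values are placeholders).
events = [
    AmbientEvent("cart_beep", 20, 60),
    AmbientEvent("announcement", 30, 90),
    AmbientEvent("plane_landing", 60, 180),
    AmbientEvent("plane_takeoff", 60, 180),
]
scheduler = AmbientScheduler(events, seed=7)
dt = 0.5
log: Dict[str, List[float]] = {}
for step in range(1201):
    now = step * dt
    for name in scheduler.update(now):
        log.setdefault(name, []).append(now)
```

Drawing each delay after the previous play, rather than running a fixed timer, keeps the sounds from settling into an obvious rhythm, which seems to suit a space where time is meant to feel unreliable.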

My current problem is the script that changes items when the viewer isn't looking at them. I found GeometryUtility.TestPlanesAABB in the scripting API: given the planes of the camera's frustum (which GeometryUtility.CalculateFrustumPlanes can produce), it checks whether an object's bounding box lies between those planes or intersects them. In other words, is the object somewhere the player can see it? I can reliably detect when an object is in view, but when I deactivate it to switch to another GameObject, the first object is still detected, which breaks the script I wrote to swap it for the next one. I got it working with two objects, but adding a third exposes the issue in full force. Next I may try instantiating objects instead of just activating them. Either way, this test has taught me a lot about how Unity determines what's "visible".
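To make the visibility idea concrete, here is a rough Python sketch of the standard frustum-versus-bounding-box test that TestPlanesAABB is documented to perform. Unity's internal implementation is not shown here and may differ. Each plane is stored as a normal plus a distance, with the normal assumed to point into the visible volume, and a box counts as "not visible" only if it sits entirely behind at least one plane. The swap logic is one possible approach, not a confirmed fix for the bug above: it tests only the currently active variant's bounds and swaps once per look-away, so a deactivated object is never checked. `Plane`, `AABB`, `aabb_in_frustum`, `box_frustum`, and `VariantSwapper` are hypothetical names, and `box_frustum` builds a simplified axis-aligned volume rather than a real perspective frustum.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Plane:
    normal: Vec3     # assumed to point into the visible volume
    distance: float  # signed distance of point p is dot(normal, p) + distance


@dataclass
class AABB:
    center: Vec3
    extents: Vec3    # half-size along each axis


def aabb_in_frustum(planes: Sequence[Plane], box: AABB) -> bool:
    """True if the box is inside or intersecting every plane (conservative)."""
    for p in planes:
        # Signed distance from the box center to the plane.
        s = sum(n * c for n, c in zip(p.normal, box.center)) + p.distance
        # Radius of the box projected onto the plane normal.
        r = sum(abs(n) * e for n, e in zip(p.normal, box.extents))
        if s + r < 0:
            return False  # whole box is behind this plane: not visible
    return True


def box_frustum(lo: Vec3, hi: Vec3) -> List[Plane]:
    """Simplified stand-in for a camera frustum: an axis-aligned volume."""
    planes = []
    for axis in range(3):
        n = [0.0, 0.0, 0.0]
        n[axis] = 1.0
        planes.append(Plane(tuple(n), -lo[axis]))  # coordinate >= lo
        n = [0.0, 0.0, 0.0]
        n[axis] = -1.0
        planes.append(Plane(tuple(n), hi[axis]))   # coordinate <= hi
    return planes


class VariantSwapper:
    """Cycles through variants at one spot, swapping only while unseen."""

    def __init__(self, variants: List[Tuple[str, AABB]]):
        self.variants = variants
        self.active = 0
        self.seen_since_swap = False

    def update(self, planes: Sequence[Plane]) -> str:
        # Only the active variant's bounds are tested; inactive ones are ignored.
        _, box = self.variants[self.active]
        if aabb_in_frustum(planes, box):
            self.seen_since_swap = True
        elif self.seen_since_swap:
            # Swap once per look-away, not on every frame spent out of view.
            self.active = (self.active + 1) % len(self.variants)
            self.seen_since_swap = False
        return self.variants[self.active][0]


looking = box_frustum((-1.0, -1.0, 0.1), (1.0, 1.0, 10.0))
away = box_frustum((9.0, -1.0, 0.1), (11.0, 1.0, 10.0))
spot = AABB((0.0, 0.0, 5.0), (0.5, 0.5, 0.5))
swapper = VariantSwapper([("chair", spot), ("vending", spot), ("desk", spot)])
shown = [swapper.update(p) for p in [looking, away, away, looking, away, looking, away]]
# shown: chair, vending, vending, vending, desk, desk, chair
```

The `seen_since_swap` flag means a variant must be seen at least once before it can change, and staying turned away for many frames produces only one swap. Without a guard like this, a swap script that runs every frame while the spot is out of view could cycle through several variants in a single look-away.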

Next?

This weekend, I'll keep working on the sightline script for the camera and hopefully find a solution. I also have several other environmental assets to model, and I'll begin texturing the ones I've already completed. On Sunday I plan to post a progress video of the application as it stands. We still haven't decided whether to use the Vive or attempt mobile VR, which I've been especially interested in. Alan suggested letting the project develop organically and making a decision near the end. For now I'm leaning towards the Vive for its familiarity and extra power, but on mobile the player is forced to stay stationary and has little control. More thoughts on that soon.