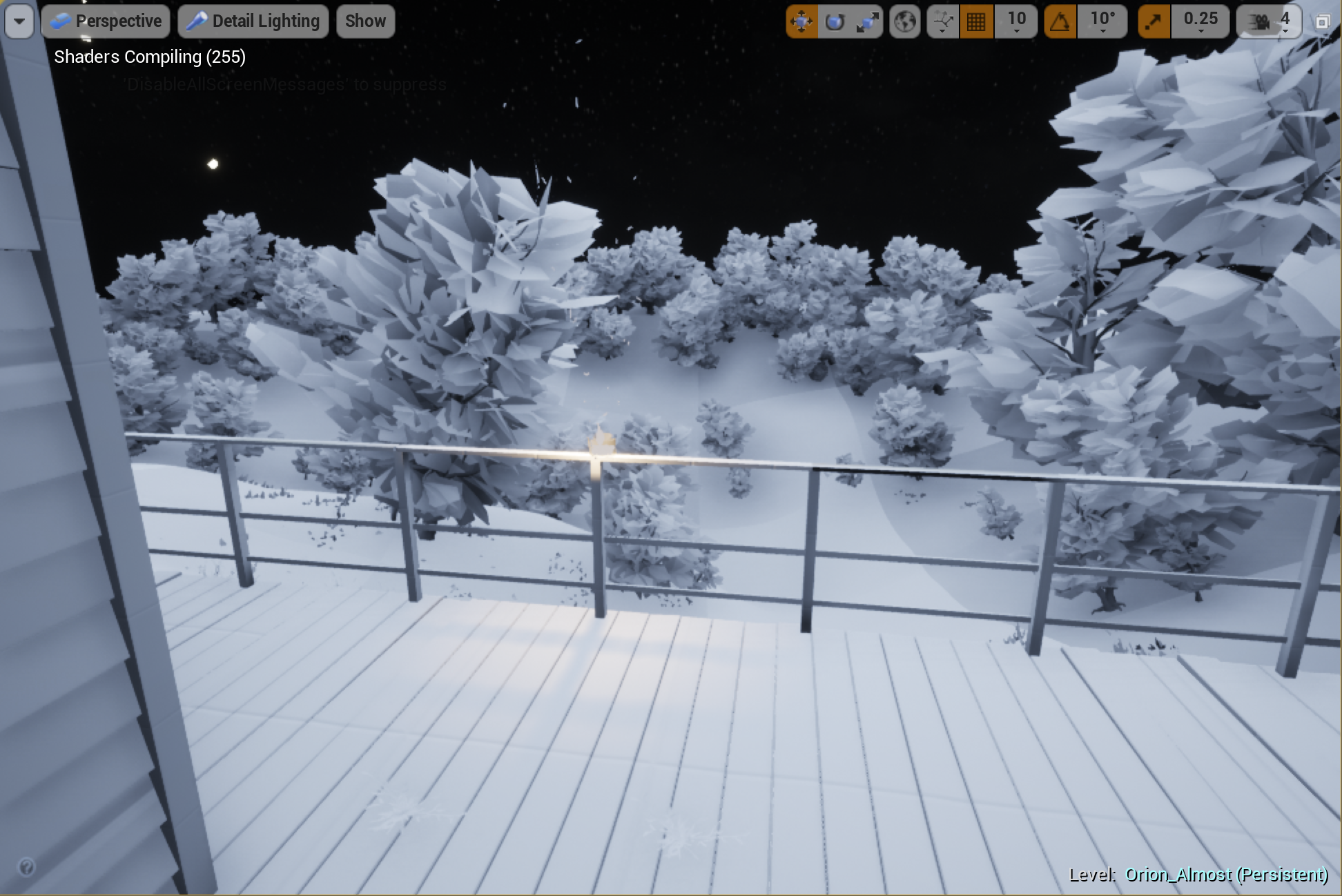

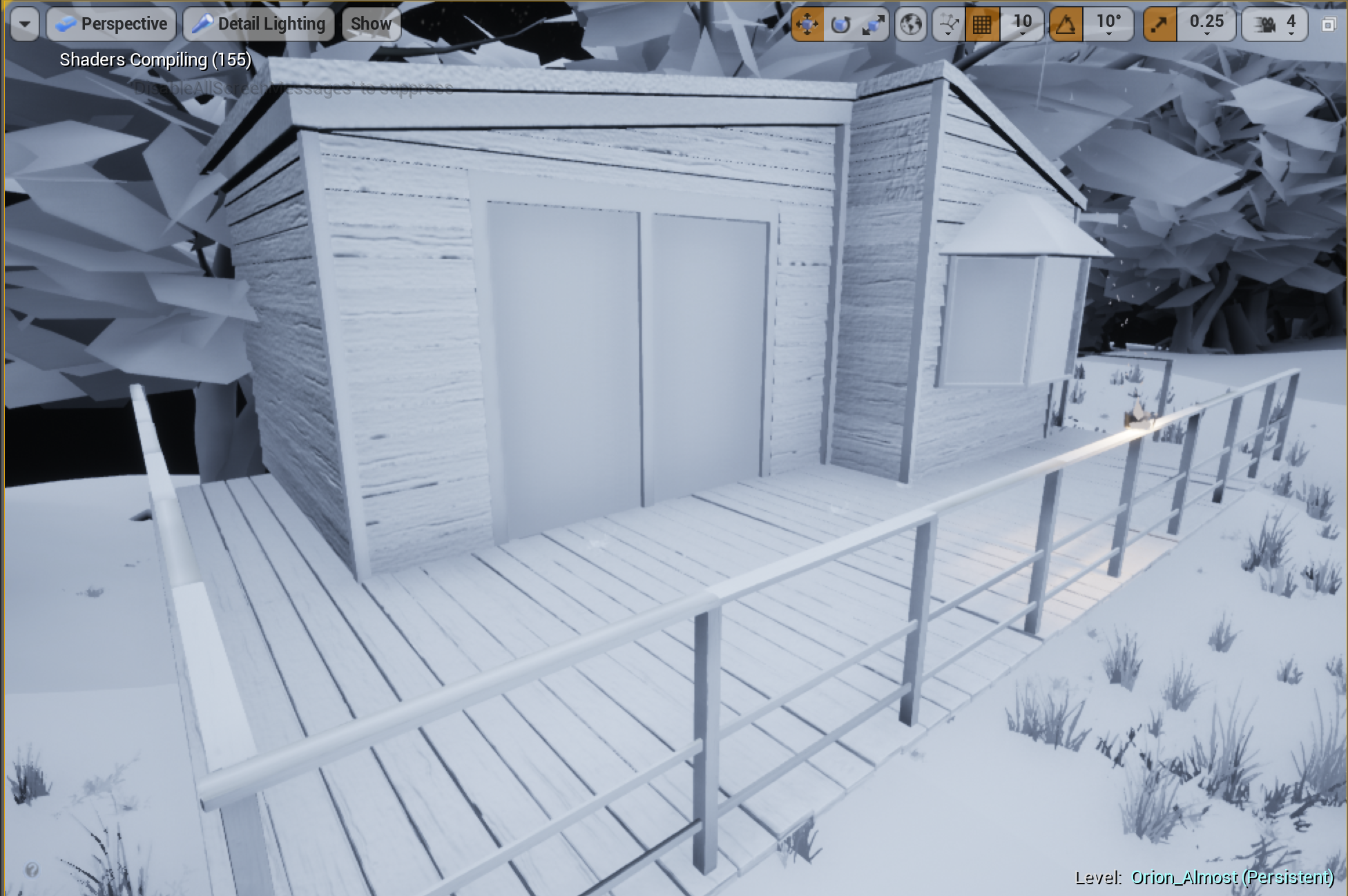

Working on projects like Orion and spending time in other VR applications have been a welcome break for exploration, but this week brings the return of thesis. We’re working on projects in phases, with Phase 1 lasting for the next five weeks.

I’ve been thinking about our prototype of Scene 1 (Ten Week Prototype) from the Ruby Bridges case study last semester. The final result was not a functional experience, technically or visually, and after speaking with peers and receiving feedback, I realized I needed to go back to some fundamental concepts and re-examine decisions we made in designing the experience, such as timing, sequencing, motion, and scene composition. I feel that our last project got into production value too soon, when we should have been focusing on the bigger questions: How does the user move through the virtual space? How much control do we give them over that movement? What variations in scale and proximity will contribute most to the experience? These are the questions we started with and seemingly lost sight of.

In developing the proposal for my project, I also began considering more specifically what I’m going to write about in my thesis and, more importantly, began putting language to those thoughts. Recent projects have allowed me to question what parameters designers operate within when designing a VR narrative experience. It gets even more complicated when we start breaking down the types of narratives being designed for. In this case, the Ruby Bridges case study is a historical narrative: how would those parameters shift between a historical narrative and a mythological narrative? What questions overlap? Orion was a great project for examining the design process for narrative, and now that I’m shifting to another, I’m interested to see how that process carries over.

Phase 1: Pitch

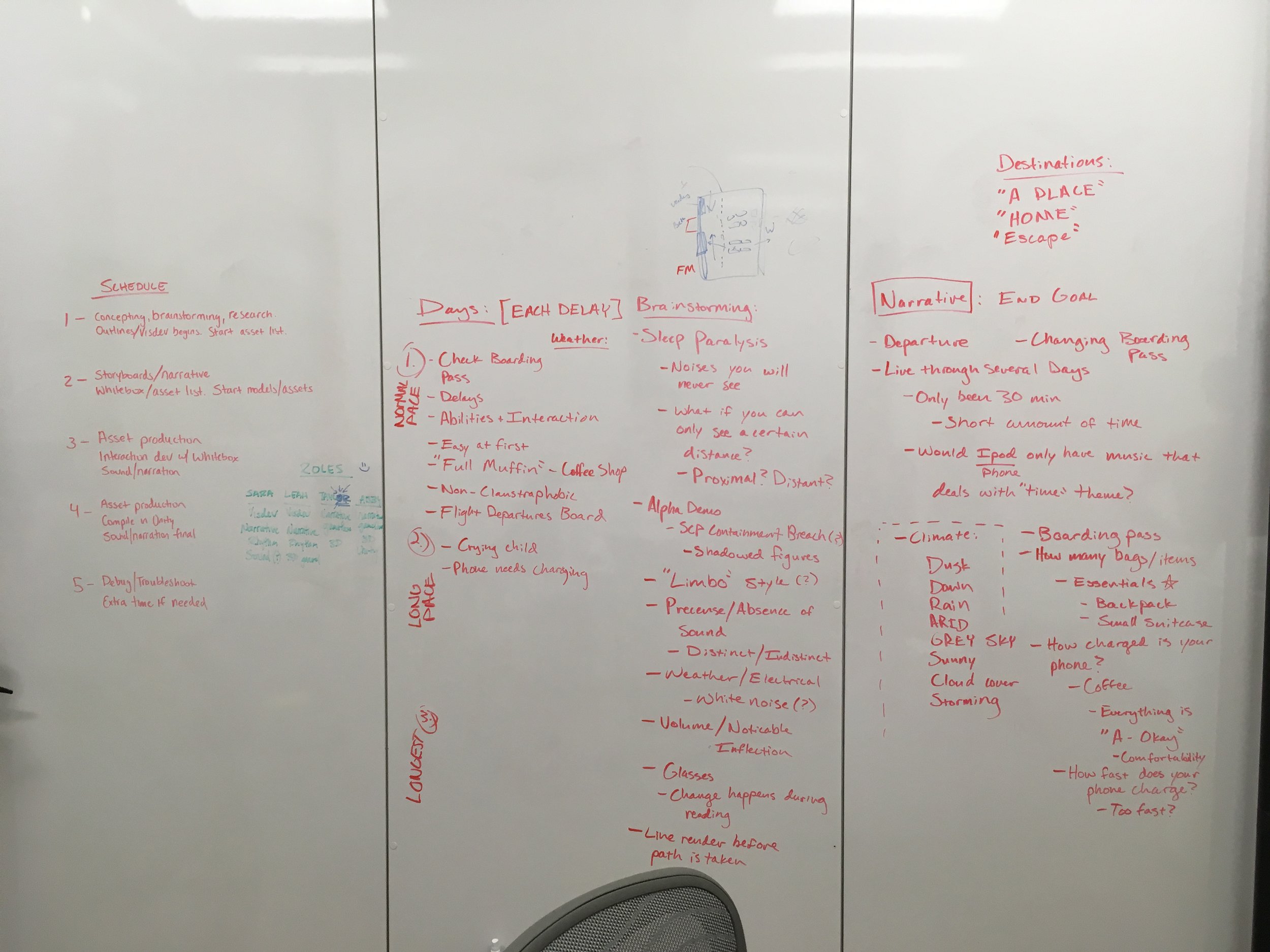

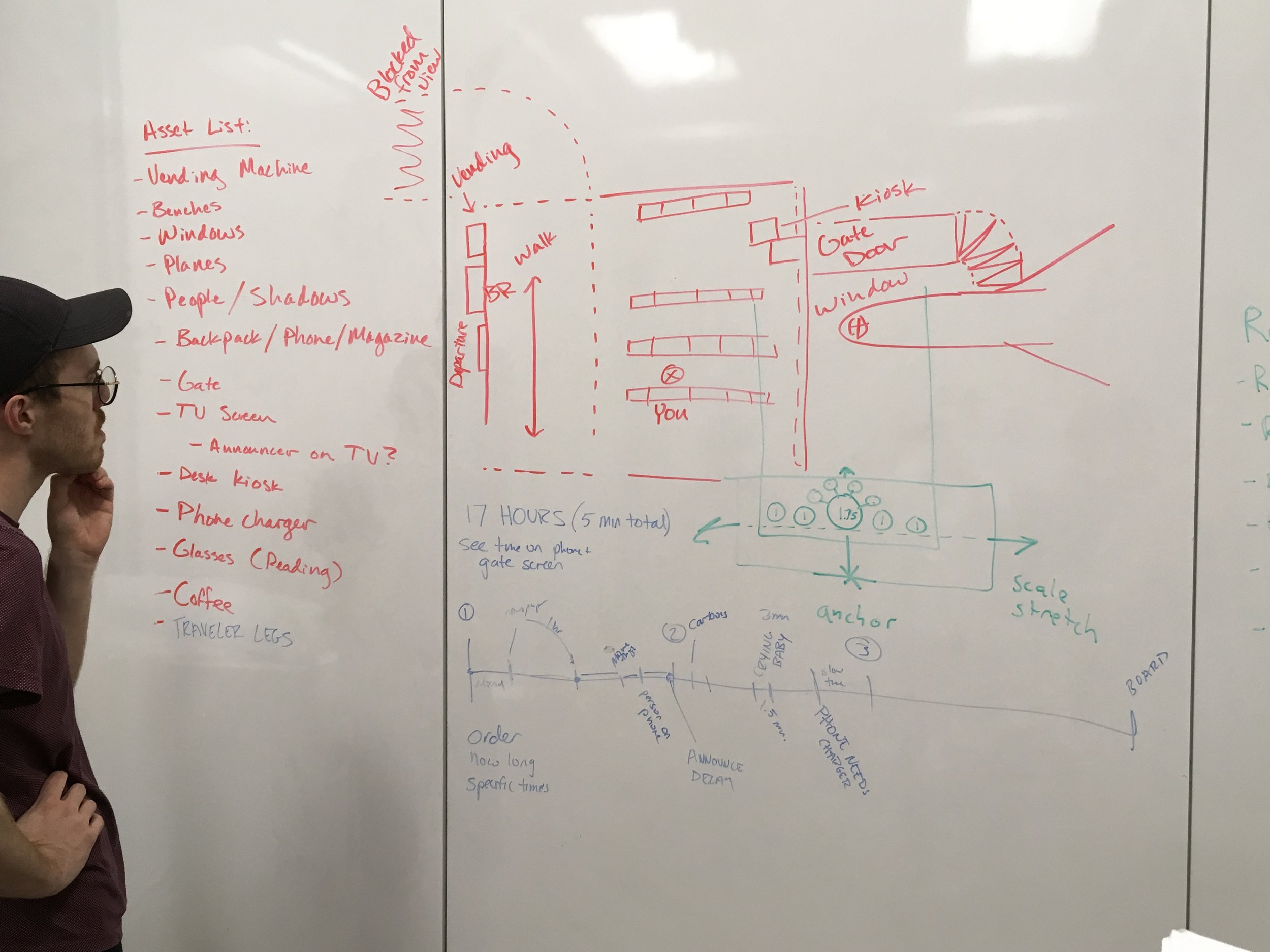

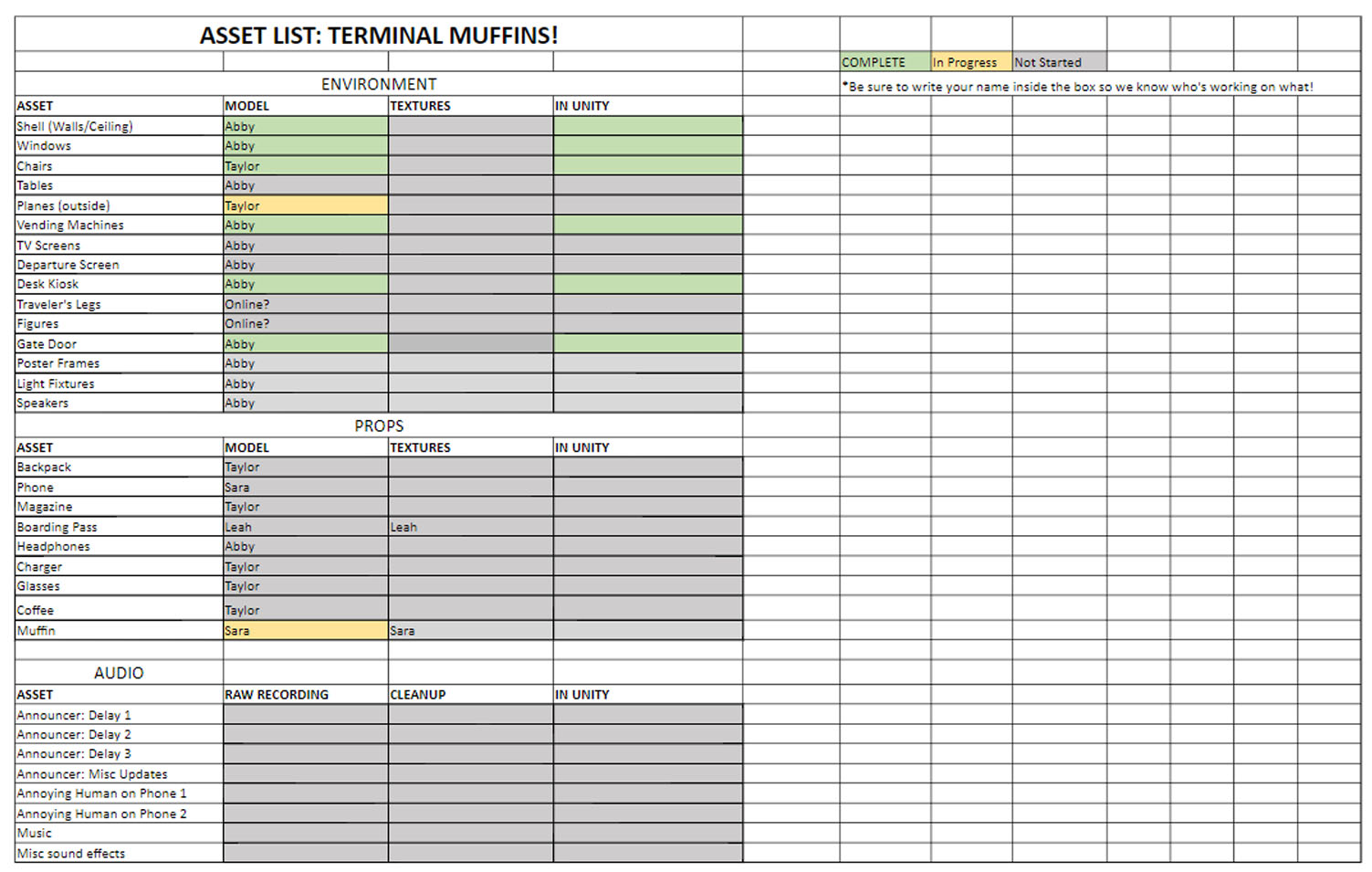

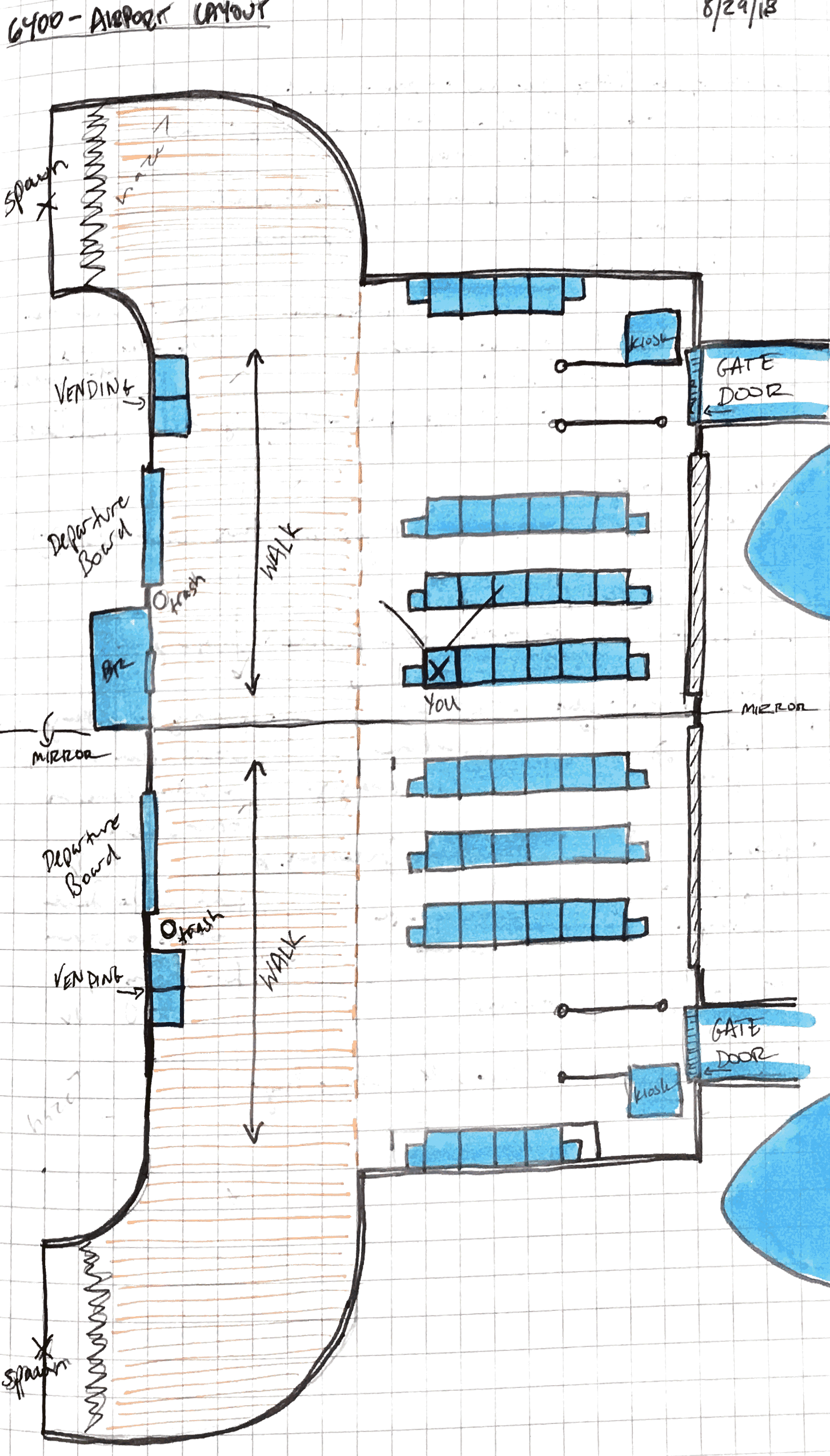

Production Schedule for Phase 1

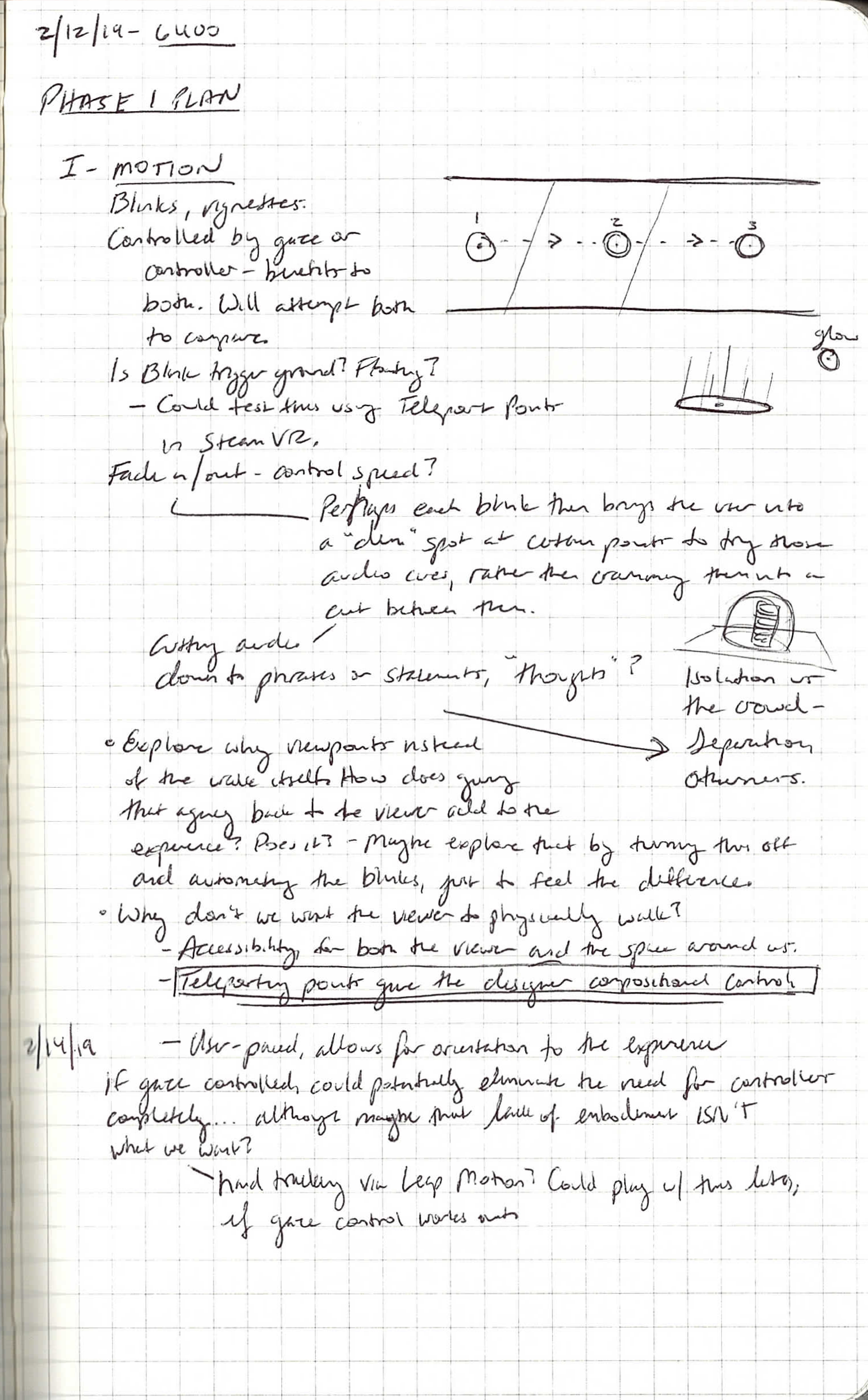

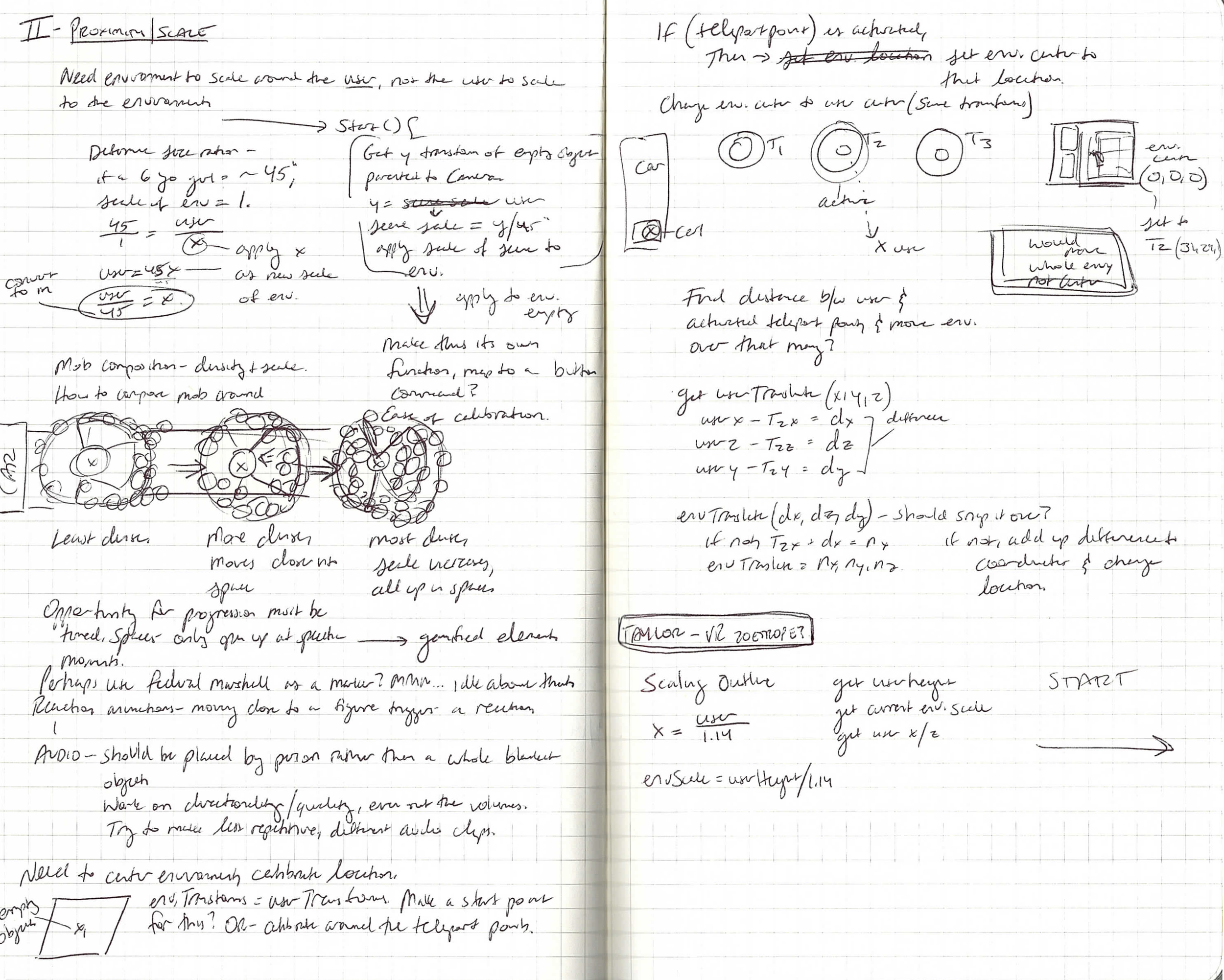

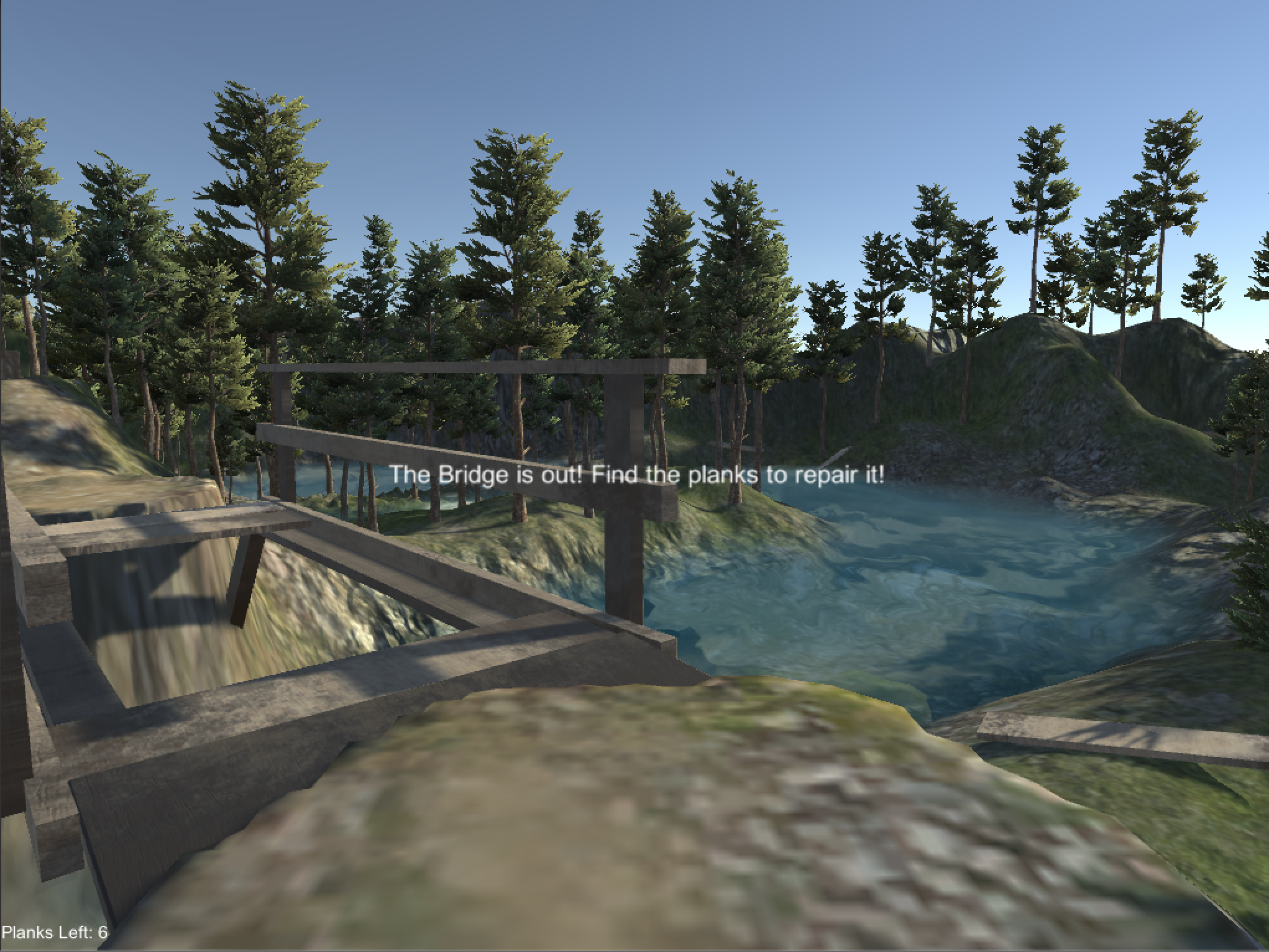

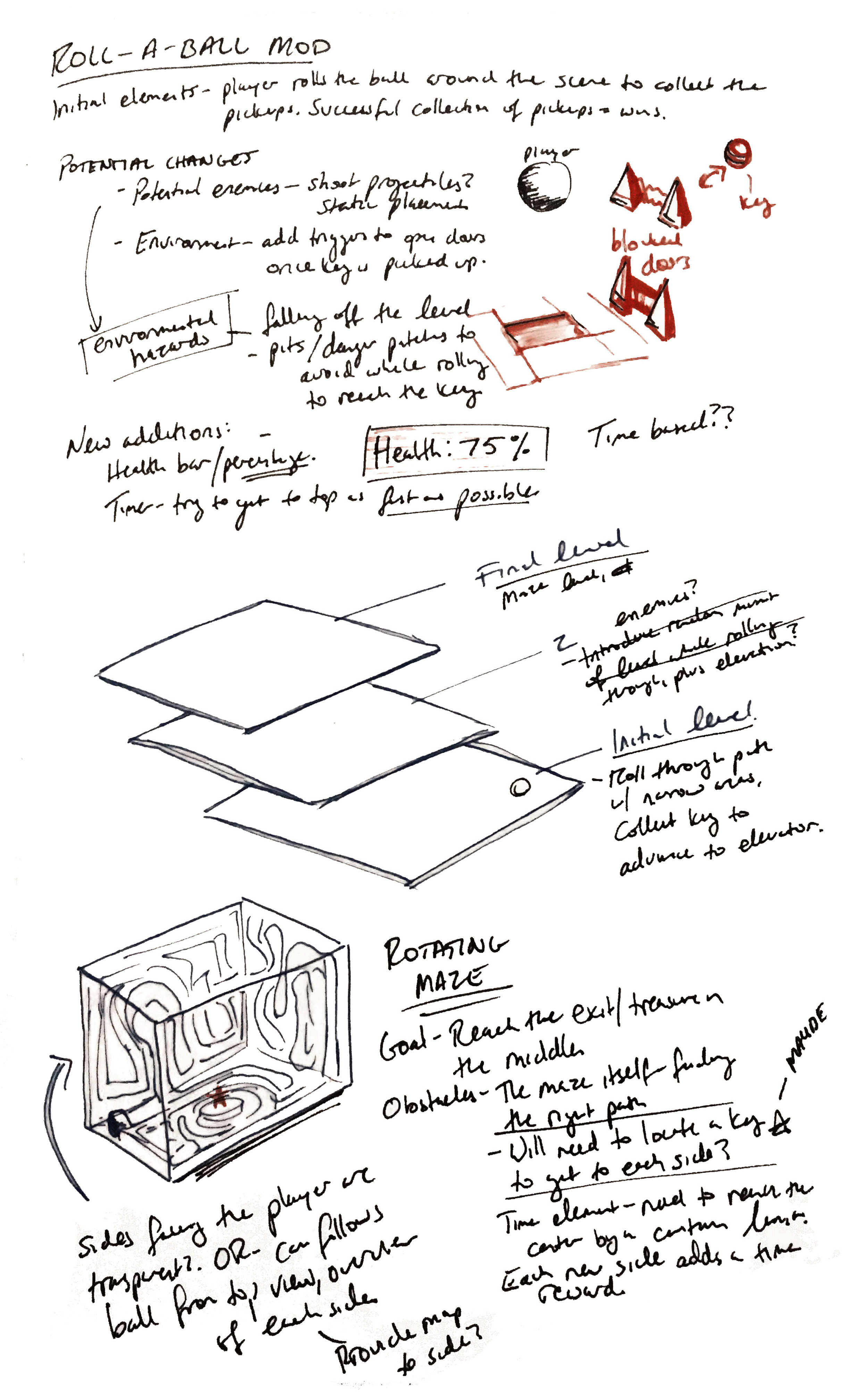

I will be creating two test scenes to address issues faced in the Ten Week Prototype. The first will address motion: how can a user progress through this space in the direction and manner the narrative requires while still having the interest and time needed for immersion? And does giving the user control over that progression benefit the scene more than having the designer control their motion? In the previous prototype we chose to animate the user’s progression at a specific pace. This time, I will be testing a “blink” style teleporting approach, allowing the user to move between points in the scene. Each of these points gives me, as a designer, compositional control while still letting the user control their pace and the time they spend in each moment. This also creates an opportunity to introduce gamified elements, which I will be exploring as I move through the project.

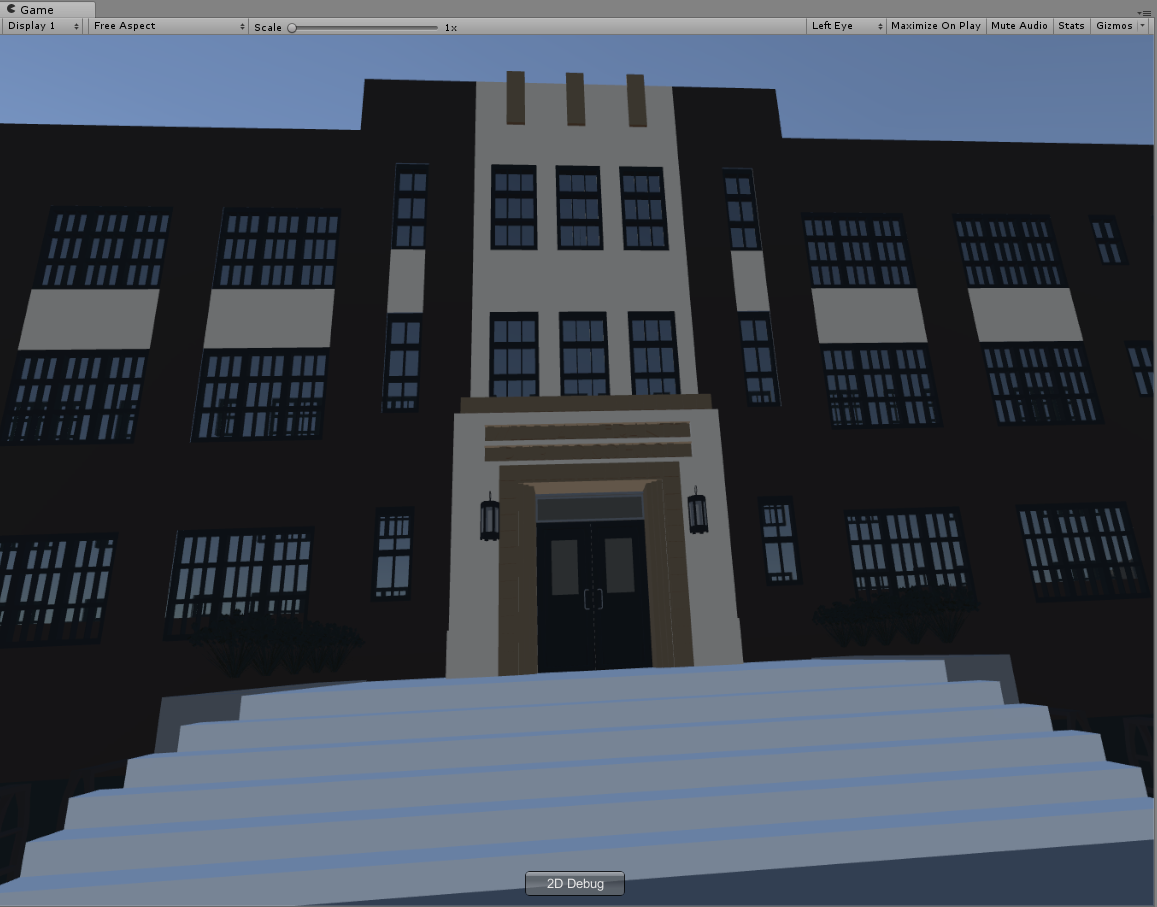

The second scene addresses proximity and scale: the user adopts the height of a six-year-old child, and the world around them is scaled accordingly, even to the point of exaggeration, so I can experience that feeling for myself. During a critique last semester, it was suggested that I create these small experiences and go through them just to understand how they feel, and I agree with this method; more firsthand experience will help inform the final design decisions. I will again be experimenting with the composition and density of the mob outside the school to create some of these experiences.

Week 1

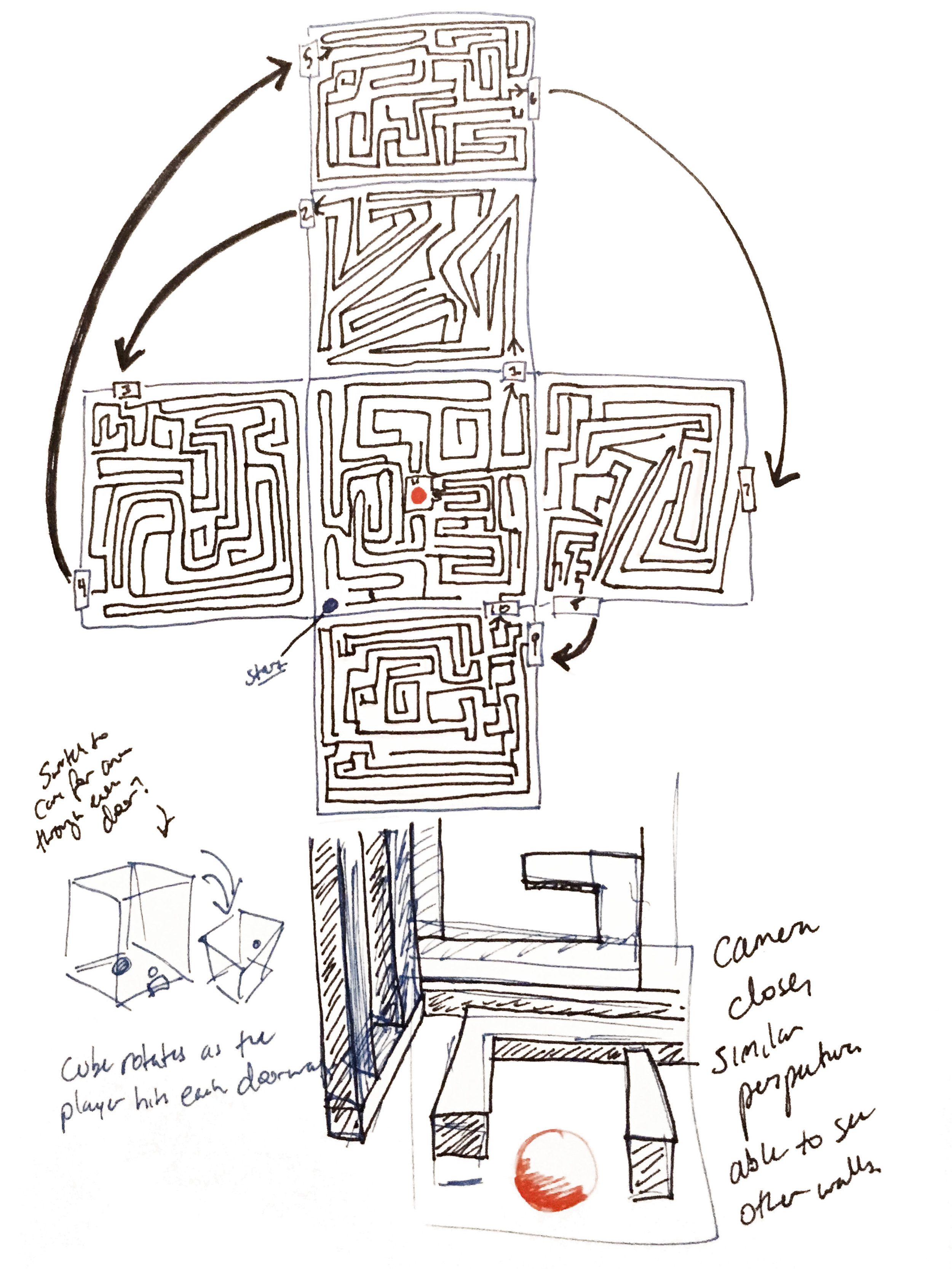

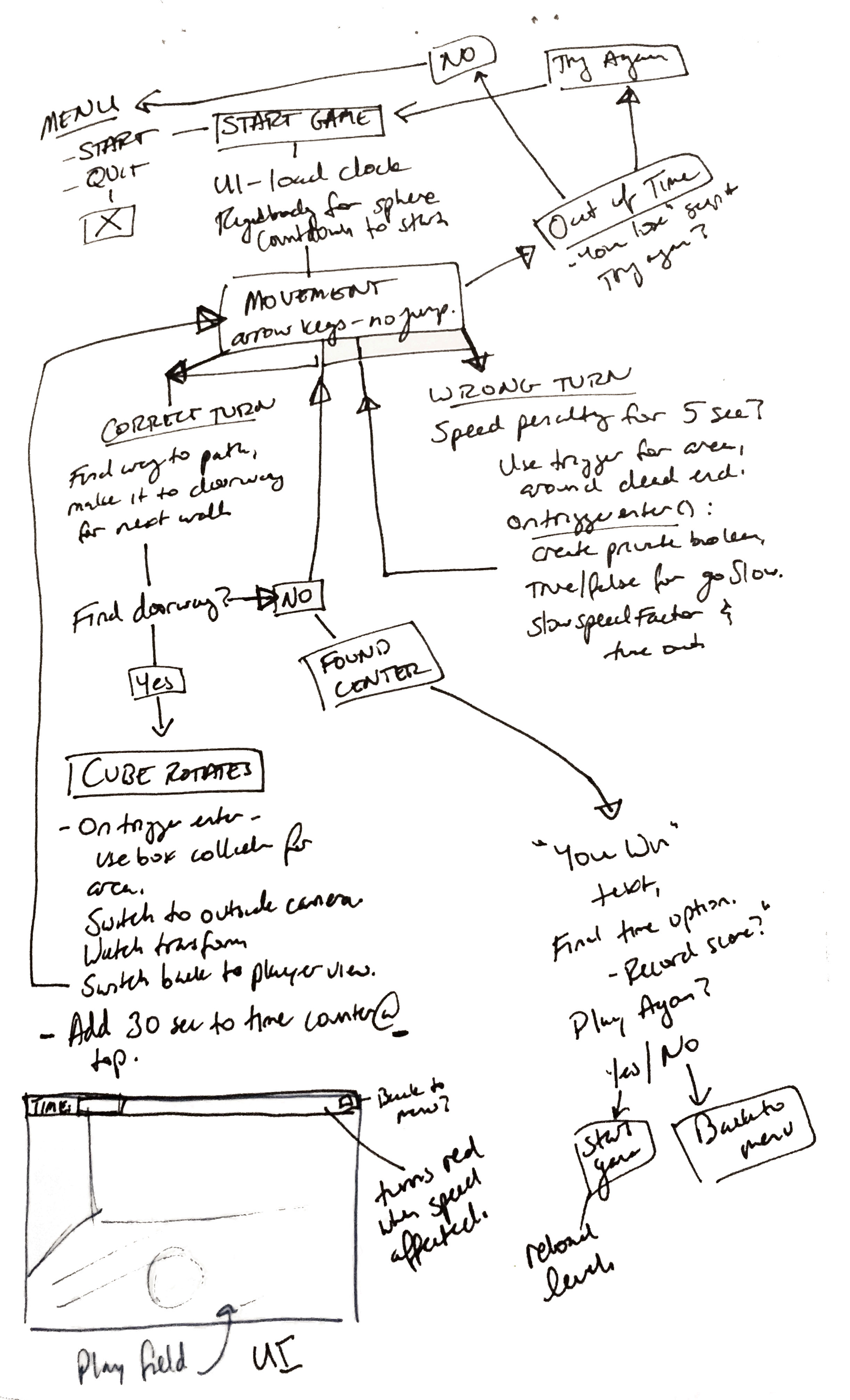

I purposefully scheduled Week 1 to focus on planning the rest of the project and building a strong foundation. I planned out what I was going to do in each scene and brainstormed ways to solve technical issues. Writing my project proposal had already helped solidify these plans, and I’ve developed a back-and-forth process with my writing: my sketchbook helps me get general concepts and ideas going, and the proposal then puts formal language to them. While writing the proposal I usually find a couple of threads I hadn’t considered, which sends me back to the sketchbook, after which I update the proposal… the cycle continues, and it has been especially productive over the last two weeks.

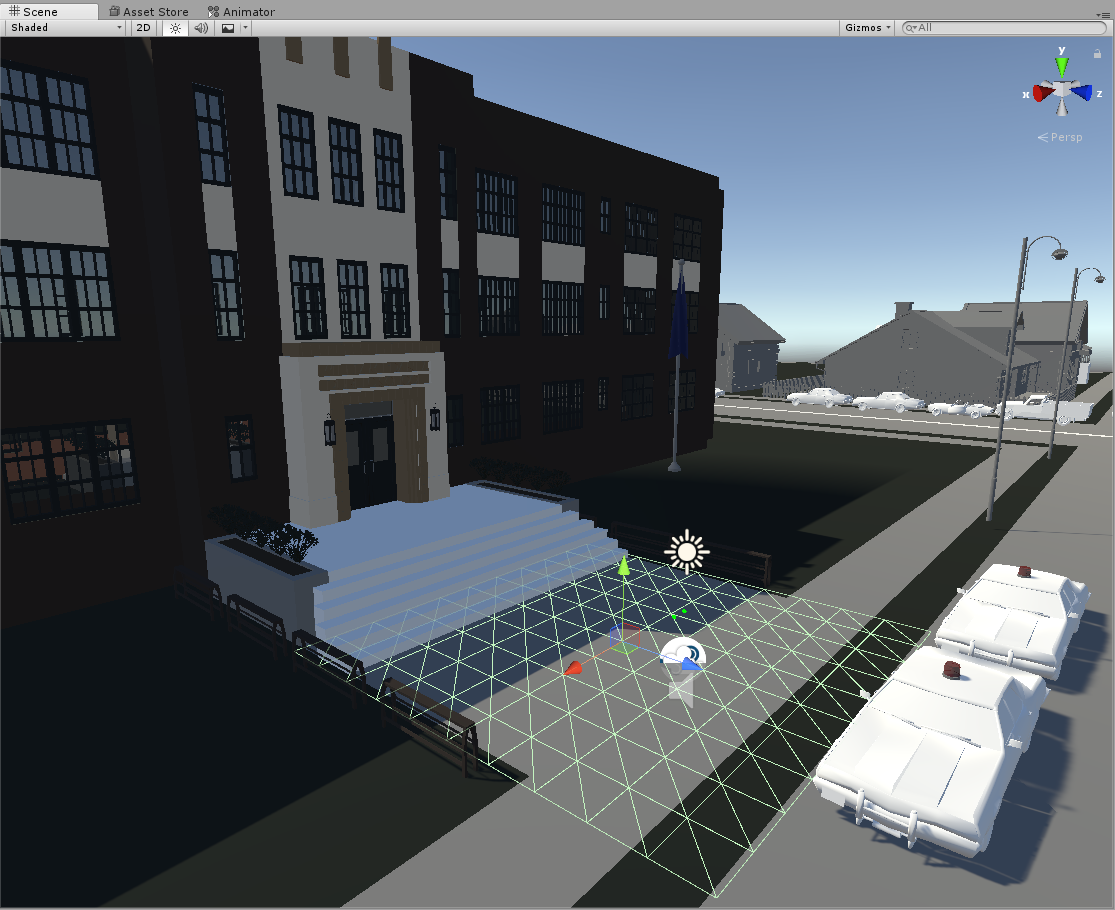

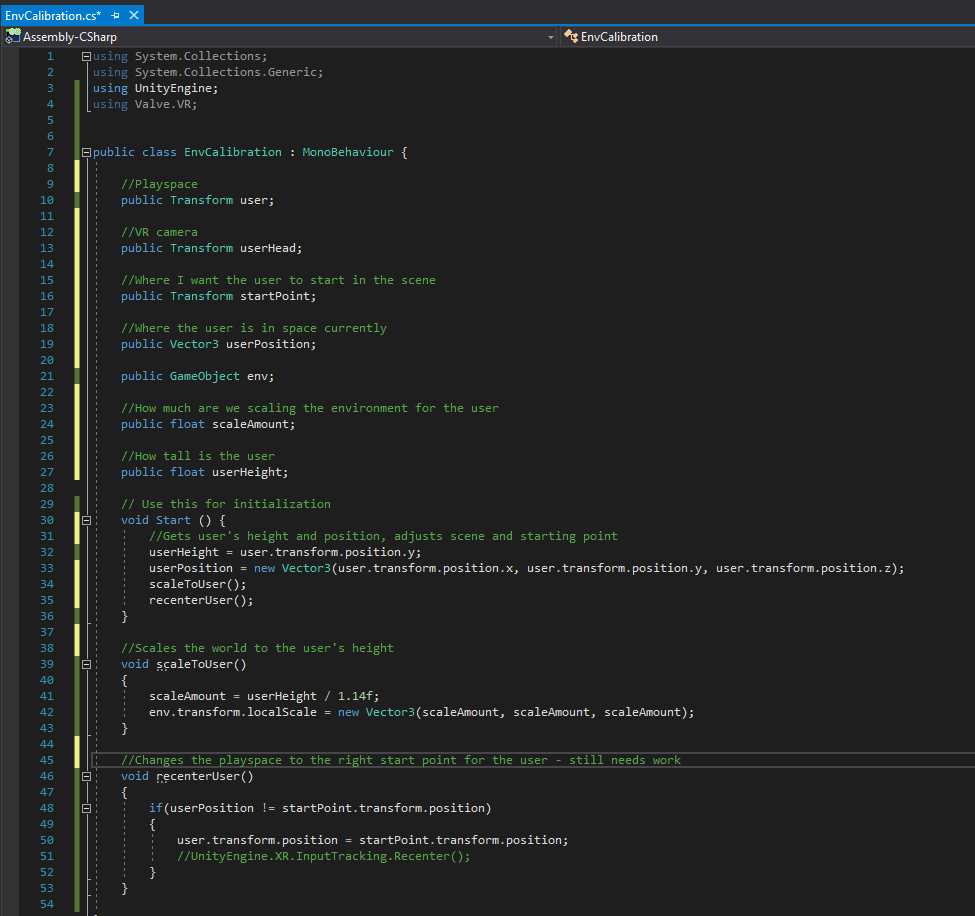

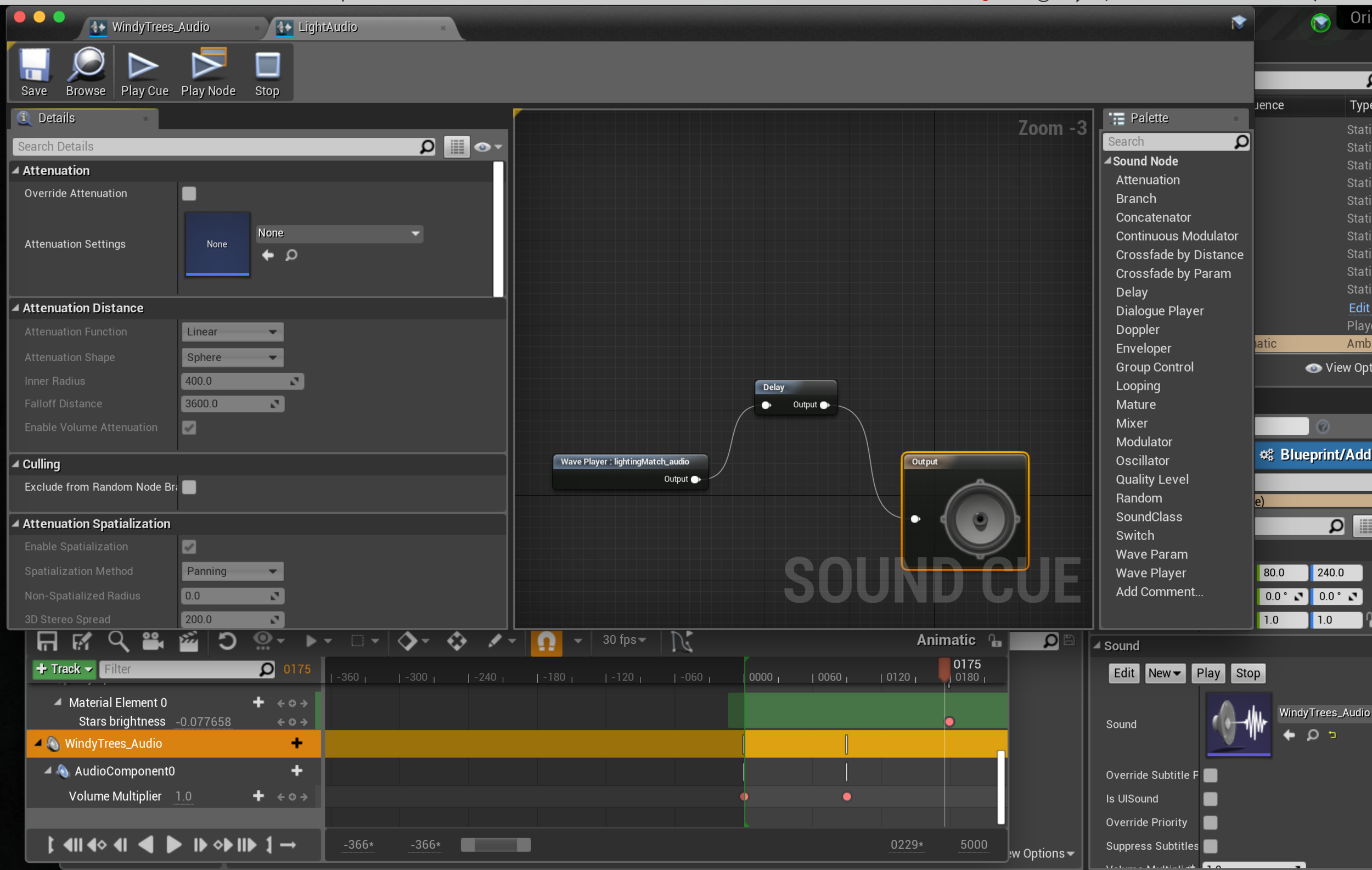

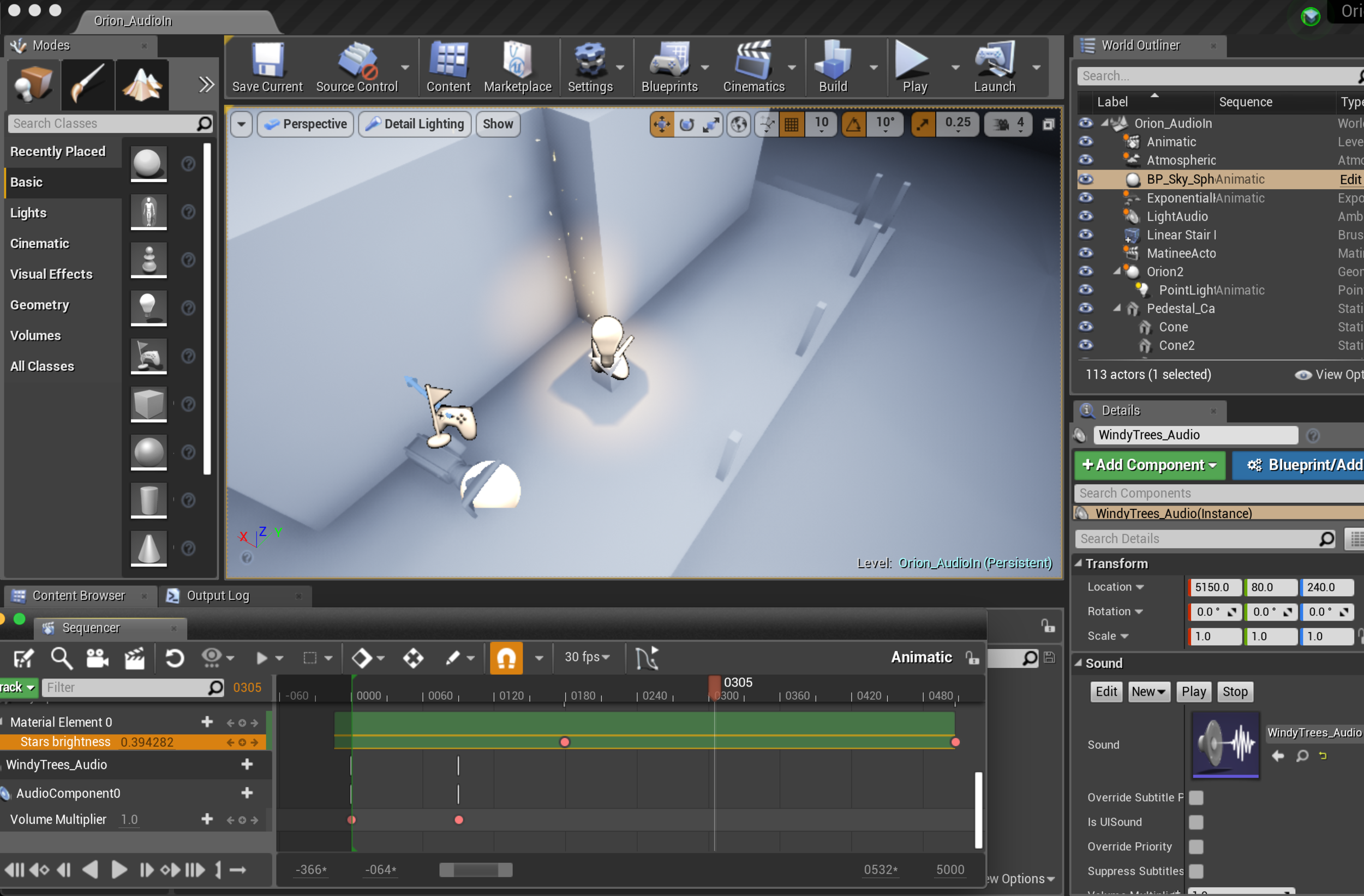

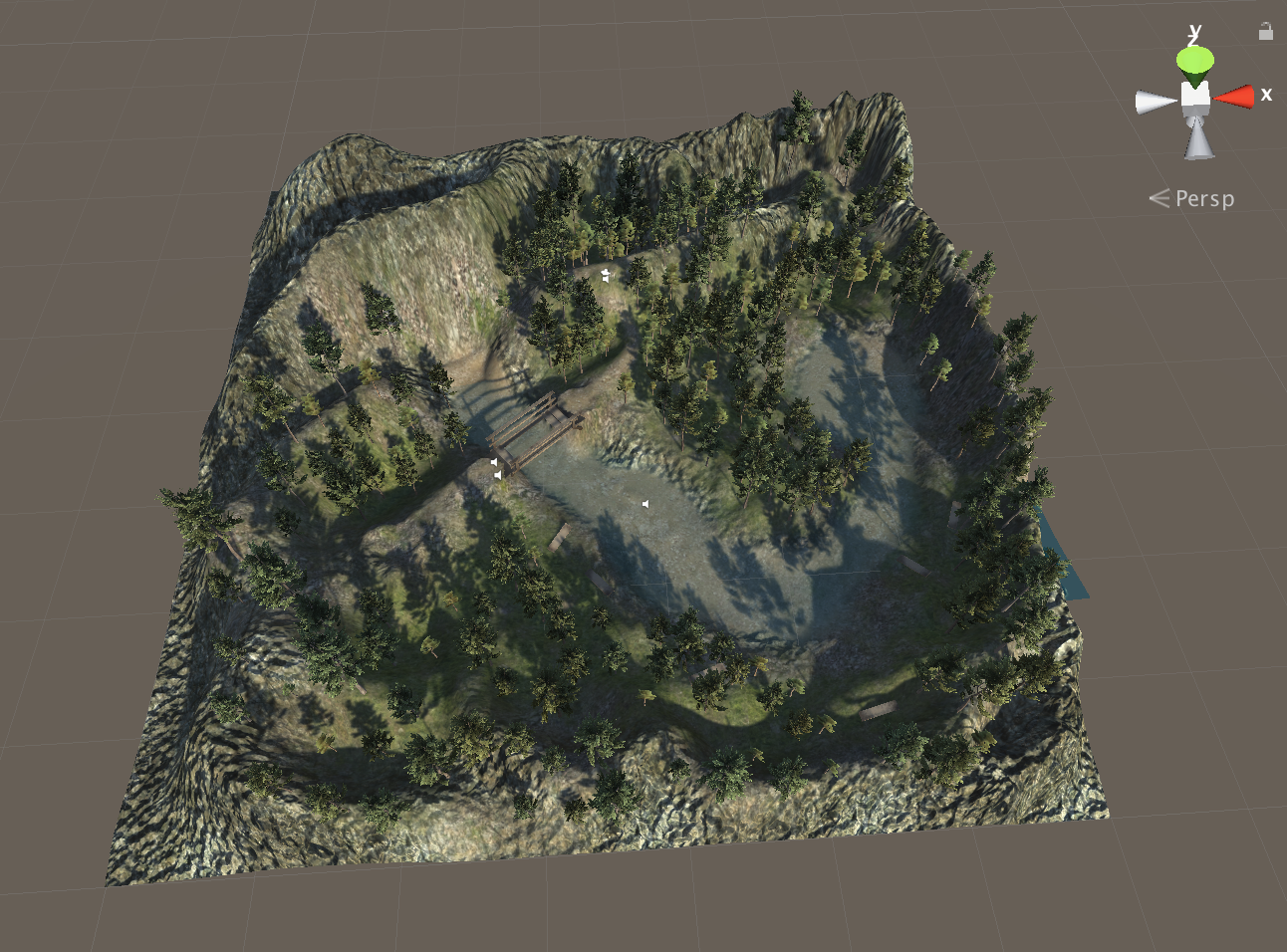

I focused on scaling the overall environment and building the test space this week, using assets from our previous prototype. The main challenge was getting the user to start the experience at the right scale and position every time. Locking the camera in VR is a pretty big “NO”, and Unity makes repositioning it especially difficult, since the tracked VR camera overrides any attempt to manually move it to its proper spot.

Scaling was much easier to figure out than I expected. Rather than forcing users to be a height that physically doesn’t make sense to them, I’m scaling the entire set relative to each user’s height, using the height of a six-year-old (1.14 m) as the target. I expected this code to be much more difficult, but so far it seems to work consistently when I test it at various heights.
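The scaling described above comes down to one ratio. Here is a minimal sketch, assuming the user’s height is known (for example, captured from the headset at calibration). Scaling the whole set by user height / target height lets anyone see the world from a 1.14 m viewpoint. It is written in Python for readability rather than as Unity C#. `world_scale_for` and the `exaggeration` knob (for the exaggerated version of the scene) are hypothetical names, not part of the project.

```python
CHILD_HEIGHT_M = 1.14  # height of a six-year-old, the target used in this project


def world_scale_for(user_height_m: float,
                    target_height_m: float = CHILD_HEIGHT_M,
                    exaggeration: float = 1.0) -> float:
    """Return the uniform scale factor to apply to the whole set.

    Scaling the set by user/target makes a user of any height perceive
    the world the way someone target_height_m tall would. An exaggeration
    above 1.0 enlarges the set further, making the viewer feel even smaller
    (a hypothetical knob for the exaggerated tests).
    """
    if user_height_m <= 0 or target_height_m <= 0 or exaggeration <= 0:
        raise ValueError("heights and exaggeration must be positive")
    return (user_height_m / target_height_m) * exaggeration


if __name__ == "__main__":
    for height in (1.14, 1.60, 1.71, 1.85):
        print(f"user {height:.2f} m -> scale set by {world_scale_for(height):.3f}")
```

For example, a 1.71 m user gets a factor of about 1.5, so a 1 m desk in the set appears 1.5 m tall to them. That is the same proportion a 1 m desk has to a 1.14 m child. Applying the scale around the user’s floor position (rather than the world origin) would keep them from drifting when the factor changes.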

I’m still working on getting the recentering function to work. Most of the documentation I found dates from 2015 and 2016 and doesn’t account for the many changes to Unity and SteamVR since then. It does contain some good concepts, though, and even a button press to recenter would be great for now. I plan to keep exploring this, and I expect I’ll be working on it throughout Phase 1.
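Because the tracked camera can’t be moved directly, one common workaround is to move and rotate its parent (the rig) so that the head lands on the spawn point. The sketch below shows only that math on the horizontal plane, in Python rather than Unity C#. `Pose2D`, `rotate_yaw`, and `recenter_rig` are hypothetical names, not SteamVR or Unity API. The yaw convention is an assumption chosen to match Unity: degrees, clockwise seen from above, forward = +z. The head pose is assumed to be read in the rig’s local space from tracking.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose2D:
    """Position on the floor plane (x, z) plus yaw in degrees."""
    x: float
    z: float
    yaw_deg: float


def rotate_yaw(x: float, z: float, yaw_deg: float) -> tuple[float, float]:
    """Rotate (x, z) about the vertical axis; +yaw turns +z toward +x."""
    r = math.radians(yaw_deg)
    return (x * math.cos(r) + z * math.sin(r),
            -x * math.sin(r) + z * math.cos(r))


def recenter_rig(head_local: Pose2D, target: Pose2D) -> Pose2D:
    """Return the rig pose that puts the tracked head at `target`.

    head_local: head pose relative to the rig, as reported by tracking.
    target:     desired world pose (spawn point and facing direction).
    Height is left to tracking, so the set scaling still applies.
    """
    # Turn the rig so the head's yaw plus the rig's yaw equals the target yaw.
    rig_yaw = target.yaw_deg - head_local.yaw_deg
    # Where the head offset ends up after the rig is rotated.
    hx, hz = rotate_yaw(head_local.x, head_local.z, rig_yaw)
    # Shift the rig so that rig position + rotated offset == target position.
    return Pose2D(target.x - hx, target.z - hz, rig_yaw)


if __name__ == "__main__":
    head = Pose2D(0.5, -0.2, 30.0)   # user standing off-center, turned 30 degrees
    spawn = Pose2D(2.0, 3.0, 0.0)    # authored start point facing +z
    print(recenter_rig(head, spawn))
```

Calling this once at startup and again on a recenter button press would put the user at the authored start point without ever overriding the camera itself.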

NEXT

Begin Blink teleport testing through the scene.

When I made this schedule, I didn’t realize that SteamVR has a Teleport Point prefab. So, yay! Production time cut down! I’ll be using that spare time to add in primitives simulating the placement of the crowd and brainstorming potential gamification/timing. I may also go on a search for some audio and add that to the scene as part of my testing.

Experiment with button pressing versus gaze direction. How does the scene feel without controllers? Would gaze navigation be effective here?

Playtest #1 with peers, gaining feedback on the button or gaze mechanisms and other developments made during the week. Will also gain feedback on the scaling and positioning of the user.

OUTSIDE RESEARCH

The games I played this week were all very physically involved, requiring a lot of motion from the player. However, none of them required teleporting or “artificial motion” via joysticks or touchpads; all movement was based on the player’s body. Even more interesting, I experienced a stronger sense of flow in these games than in past titles, though each for different reasons. Considering that my thesis will not be this action-oriented, it’s helpful to see how specific components in these games (sound, motion, repetition) are used in ways that ultimately make a flow state possible.

FLOW VIA SOUND: Beat Saber

Beat Saber is a VR rhythm game played as a standing experience, where players swing their arms and lean to hit cubes with sabers on the beat and in the indicated direction. Unlike the other two, I’ve been playing this game for a few weeks, so I’ve had time to examine my increase in skill level as well as the kind of experience I was having. It was initially very difficult to get used to the cubes flying directly at me and to react to the arrows indicated on them, a longer adjustment than I expected, actually. I play games like this on my phone using my thumbs, so my body knew what it needed to do… but had a difficult time getting my arms to react. After a couple of weeks I can now play the above song on Hard mode, which is the level I’m including for this group of games.

Every time I play a song, I usually reach a point where I experience flow: reacting to the cubes as they come and following the rhythm without really thinking about it (and playing significantly better than when I am thinking about it). It’s a state that feels instinctual and occasionally feels as though time slows down, a common description of flow. Sound drives that experience; without the music, this would be far more anxiety-inducing and stressful than enjoyable.

After playing, I was thinking a lot about Csikszentmihalyi’s book Flow, where he outlines several important features of a flow activity: rules requiring the learning of skills, goals, feedback, and the possibility of control. Even with varying definitions of what counts as a game, most require those components in one way or another. He references the French psychological anthropologist Roger Caillois and his four classes of games; based on those, Beat Saber is an agonistic game, one in which competition is the main feature. In this case, the competition is against yourself to improve your skills and against others to move up the leaderboards. However, as often as I fell into flow, I also fell out of it easily when a level grew too difficult or beyond my skills.

FLOW VIA MOTION: SUPERHOT VR

I’m not quite sure how to categorize Superhot VR, but it’s the most physical game I’ve ever played in VR. Players can pick up items or use their fists to destroy enemies making their way toward them in changing environments… the twist is, time only moves when you move. Every time I rotate my head the enemies get a little closer, and if I reach out to pick up a weapon, suddenly I have to dodge a projectile. As the number of enemies increased with each level, I found myself kneeling, crouching, and dodging. There is no teleportation or motion beyond your own physical movement.

Everything here is reactionary. I experienced a strong level of flow, unlike the intermittent experience I tend to have in Beat Saber. Time being distorted here and used as a game mechanic almost seemed to echo those flow states. The stages are all different with minimal indication of what is coming next, and often the scene starts with enemies within reach. I didn’t have to think about what buttons or motions were required to move, it was a natural interface - I could just move my body to throw punches or duck behind walls. While this was effectively immersive and did result in a strong flow state, I was pulled out of it immediately every time I ran into a wall in my office or accidentally attacked an innocent stack of items sitting on my desk.

Sound was minimal, which I very much appreciated, and which sets this game in stark contrast to Beat Saber. The focus of this game is motion, not music or rhythm. Continuing a side note from the last two weeks: death states in Superhot VR were much less disruptive than in the other games. The entire environment is white, so the fade to white and the restart menu aren’t very jarring or disruptive to the experience. It was easy to jump back into the level and begin again. This may be an interesting idea for transitions between scenes in my thesis: using a fade or transition that is close to the environment rather than the standard “fade to black”. I suppose it depends on the sequence I’m designing… a thought for next week.

Elven Assassin VR

Last, Elven Assassin VR is a game that combines a little bit of everything. It puts you in the role of an archer fending off waves of orcs planning to invade your town. Your position is generally static, with some ducking and leaning and the ability to teleport to different vantage points within the scene. The game deals in precision, speed, and the physical motion of firing the bow. The satisfaction of hitting a target was immense, and I ended up playing until my arms hurt. The flow in this game comes from the rhythm of motion: every shot requires you to nock, draw, aim, and release an arrow to take down one enemy. There isn’t really a narrative in this game at the moment; it operates more like target practice, and the concentration it required was what induced that flow state.

Falling out of flow was a little easier here because of technical glitches: tracking on my controllers would get disrupted, and my bow would fly across the world while I fell to a random orc sneaking through the town. The game’s multiplayer function is also notable, and its social aspect may be a worthwhile avenue to explore.

Conclusions

I didn’t actually expect to talk about flow at all; it was just a happy side effect. These are three VERY different games, and that experience of flow was the strongest commonality between them. This goes back to game design as a whole rather than VR design specifically, but the small differences in how each game approached physical action and reaction to the environment really drove that point home for me. Where Elven Assassin VR focused on action that was repetitive and chaotic, Beat Saber focused on the rhythm of those actions and applied them to the template of the song. Superhot VR left the choice of action up to you, but suggested some paths and required movement in order to advance. The result was neither repetitive nor rhythmic, but required control.

I am not planning on making experiences as heavily focused on action and movement as these, but bringing what I’ve seen here about the choice of motion to smaller actions or interactions with the environments in my thesis work might help me answer some of the design questions I’m exploring in the Phase 1 project. How can a user move through a space? I’m considering teleporting from point to point, but have not yet thought about potential secondary actions on the part of the user, the spaces where gamification could occur. These games reframed motion for me, reminding me to define more specifically the type of motion expected of the user and to ensure that the motion (or lack thereof) enhances the experience itself.