PROGRESS

Last week’s successful gaze development paid off. Scene 01 no longer requires the use of controllers!

The big achievement this week was adding all the gaze-based teleport points to the scene I built for demos two weeks ago. The actual script for casting a ray from the camera and teleporting to a point with a trigger didn’t require much modification. The trouble was deconstructing EVERYTHING that was attached to the original, time-based teleporting, attaching it to the new points, and then making sure to activate/deactivate the next and previous points so the user can’t go backwards. I also added a light to each point that increases in intensity when the user starts staring at it, as an indicator for myself and other players.
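The project’s actual scripts aren’t shown here, but the activate/deactivate logic described above can be sketched as a small state machine. This is a minimal, engine-agnostic Python sketch under stated assumptions: `TeleportPoint` and `TeleportSequence` are hypothetical names, and a real scene would toggle colliders and lights rather than a boolean flag.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class TeleportPoint:
    """Hypothetical stand-in for one gaze teleport point in the scene."""
    name: str
    active: bool = False  # only an active point reacts to gaze


class TeleportSequence:
    """Keeps only the next point on the path active so the user can't go backwards."""

    def __init__(self, points: List[TeleportPoint]):
        self.points = points
        self.current = -1  # index of the point the user stands on; -1 = starting position
        for p in self.points:
            p.active = False
        if self.points:
            self.points[0].active = True  # at the start, only the first point is live

    def teleport_to(self, index: int) -> bool:
        """Move to points[index] if it is the active point; return True on success."""
        if not (0 <= index < len(self.points)) or not self.points[index].active:
            return False
        self.points[index].active = False  # the point you arrive at can't be re-triggered
        self.current = index
        if index + 1 < len(self.points):
            self.points[index + 1].active = True  # reveal only the next point
        return True
```

Keeping exactly one point active at a time is what stops the user from gazing back at an earlier point and teleporting backwards.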

Me testing gaze teleporting in Scene 01. 04/19/19

After adding these features and testing them myself, I found a few errors, shown in the video above.

The ray’s range from the headset was set too short, which made it difficult to reach the next point. A short range prevents the user from triggering anything too far ahead of their position in the scene, but unfortunately it also kept me from reaching some of the teleport points. I arranged the mob variations so that the user would need a clear view of the next step to progress, but because the ray only reacts to objects in the Teleport layer, the user can look straight through the legs of the mob and still activate the next point without ever gaining direct visual contact. The doors of the school are intended to be a trigger that ends the experience; instead, the user just keeps activating the teleport function and respawning directly in front of them. After addressing these issues (minus the points visible through the mob’s legs), I added a gaze-based “start” button to the main menu to create a completely controller-free experience and introduce this concept before the user actually enters the scene.
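Why the mob doesn’t block the gaze can be illustrated with a toy model of a ray query. In this sketch (an assumption, not the project’s code), `Hit`, the `"Mob"` layer name, and the distances are hypothetical; only the `"Teleport"` layer comes from the scene. A point fires only if the nearest hit that passes the layer mask and the range check is on the Teleport layer.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Set


@dataclass
class Hit:
    """One collider the gaze ray passes through (hypothetical; a real engine reports these)."""
    name: str
    layer: str
    distance: float


def gaze_target(hits: Sequence[Hit], mask: Set[str], max_range: float) -> Optional[Hit]:
    """Return the nearest hit within range whose layer is in the mask, or None."""
    candidates = [h for h in hits if h.layer in mask and h.distance <= max_range]
    return min(candidates, key=lambda h: h.distance, default=None)


def triggers_teleport(hits: Sequence[Hit], mask: Set[str], max_range: float) -> bool:
    """A point fires only if the nearest *considered* hit is on the Teleport layer."""
    target = gaze_target(hits, mask, max_range)
    return target is not None and target.layer == "Teleport"
```

With the mask limited to `{"Teleport"}`, the mob is never considered, so the gaze passes straight through the legs; adding the mob’s layer to the mask would let it occlude the point, and a `max_range` that is too small reproduces the unreachable-point problem.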

Script commenting for

For my own process, I realized that many of the scripts I’m writing/using will be useful to us moving forward in development and in other projects. I took a quick break from scene development to add comments to the scripts for my own sanity and ease of understanding down the line. It’s a new habit I’m trying to develop, thanks to Matt’s Programming for Designers class.

We were fortunate enough to host a VR Columbus meeting on Friday evening at ACCAD and demo this new scene with gaze teleportation for the first time. Below is one of the recordings I took of a user moving through the experience from the main menu all the way to the end, with sound from the experience included.

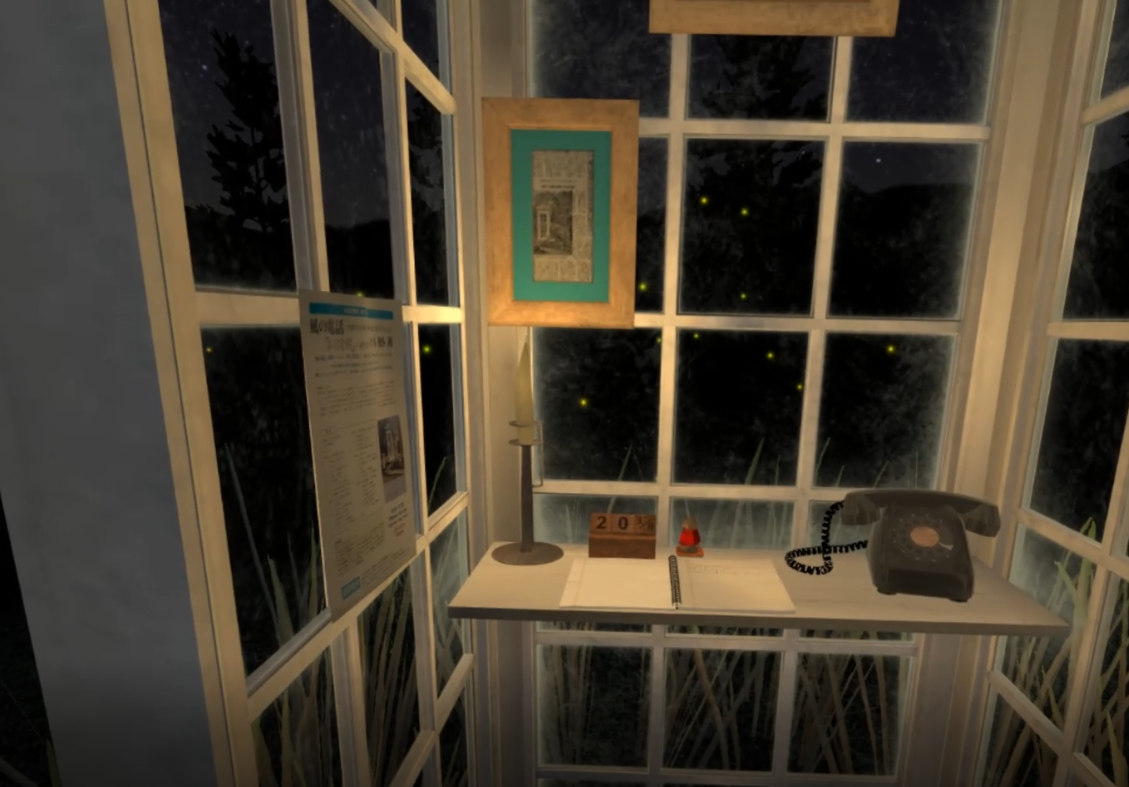

User playtest at ACCAD, 04/19/19.

While users were in the experience, I watched the scene editor (pictured above) to get an idea of where people were looking and what changes were needed to make gaze detection easier. The blue lines in the scene above show the ray being cast from the user’s camera. When a line turns yellow, the user is making contact with a teleport point.

After watching a few users go through, I think I experienced a case of “designer blindness”: after working on an experience for a certain amount of time, you get so proficient at moving through it that you miss potential user issues. I was really surprised at how much people move their heads in VR! The teleport points require the ray to stay on the collider for 3 seconds before activating, and most people only managed about two seconds before their head twitched and the count restarted. From this, I imagine making the colliders larger would help. The farther away a point is, the more difficult it is to activate, since a collider of fixed size covers a smaller angle of view at a distance and a small head movement slides the ray off it more easily. Users would tell me “I’m looking right at it!” when the ray was really hitting the floor just below the trigger point. The light cue was somewhat helpful, but I think the user needs more than that to figure out where their gaze is actually falling. I think adding a light reticle to the camera will help with this, and I’ll be testing it over the next week to see how it feels. My concern is that the reticle will add a layer of separation between the user and the scene, reminding them of the technology and breaking flow/immersion.
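The 3-second dwell and the restart on a head twitch can be sketched as a per-frame timer. This is a hypothetical Python sketch, not the project’s script: `DwellTimer` and its `grace` parameter are assumptions. With `grace=0` it behaves like the strict reset described above; a small grace window is one alternative (separate from larger colliders or a reticle) that tolerates brief slips off the collider.

```python
class DwellTimer:
    """Fires once the gaze has stayed on a target for `dwell` seconds.

    `grace` (hypothetical tuning value) lets the ray slip off the target for a
    moment without restarting the count; grace=0 reproduces a strict reset.
    """

    def __init__(self, dwell: float = 3.0, grace: float = 0.0):
        self.dwell = dwell
        self.grace = grace
        self.elapsed = 0.0   # accumulated time on target
        self.off_time = 0.0  # how long the ray has currently been off target

    def update(self, on_target: bool, dt: float) -> bool:
        """Advance one frame of length dt; return True on the frame the dwell completes."""
        if on_target:
            self.off_time = 0.0
            self.elapsed += dt
        else:
            self.off_time += dt
            if self.off_time > self.grace:
                self.elapsed = 0.0  # off target longer than the grace window: restart
        if self.elapsed >= self.dwell:
            self.elapsed = 0.0
            return True
        return False

    @property
    def progress(self) -> float:
        """Fraction from 0 to 1, e.g. to drive the point's light intensity."""
        return min(self.elapsed / self.dwell, 1.0)
```

The `progress` value could also drive the light’s intensity, so the same number that counts toward the teleport is what the user sees.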

I know this is just a prototype and proof of concept, but the teleport points are not especially obvious in the scene and their function is not clear from the start. Tori and I still have to brief the user before the experience begins, reminding them to look around, to stand up, and that the points are even there. We’ve been planning on using Lucille Bridges’ character as a means of progression, walking ahead and calling the user’s attention with audio cues, but even then I’m not sure how to transfer these attributes of “focus” and indication of action to a human figure, or whether that’s even required. Maybe a glance at Lucille Bridges’ face is enough to move the user forward. This is a point of experimentation for Tori and me beyond the current prototype.

What’s Next

Overall, I think this is a good foundation to build from. Now that I understand user action and progression, I feel I can start layering smaller interactions from the user and mob into the scene. This phase is due next week, so I’ll save my thoughts on the summer for then. In the immediate future, Phase 2 requires troubleshooting and adjusting all the colliders in the scene. I will also be testing some reactions to proximity and gaze with one or two of the crowd members. Ideally, a user will look someone in the eyes and cause an insult to be hurled or an aggressive motion to occur. To improve gaze accuracy, I will add a reticle to the camera and see what that effect is like.

OUTSIDE RESEARCH

Research this week included three experiences on the Oculus Rift: Dreams of Dali, The Night Cafe, and Phone of the Wind. The descriptions of all three really drew me in, as they’re meant to be contemplative experiences that require you to navigate space and uncover the narrative (or lack thereof).

I started with Dreams of Dali. This experience was on display at The Dali Museum in St. Petersburg, FL for over two years, and explores Dali’s painting “Archaeological Reminiscence of Millet’s ‘Angelus’”. Looking at the museum’s page about this experience, I noticed that it is available in multiple formats for VR headsets as well as a “linear 360” view. This might be the first time I’ve seen that much variety offered on a museum page. The VR experience was also covered by museum admission, which is nice of them; the on-location experiences I’ve done so far have required additional ticket purchases.

“Archaeological Reminiscence of Millet’s ‘Angelus’”, Dali.

I had to laugh when the experience started up. The very first screen instructed me to stare at a glowing orb for 3 seconds to move around, with a glowing orb included to begin the game. An interesting case study for the problems I was discussing in my Phase 2 project! This experience required me to move large distances, and the inclusion of a reticle helped enormously. It only appeared when my gaze was near an orb, which left me free to explore the rest of the world without obstruction. Within the experience, I was able to navigate in whatever order I pleased. Some orbs were only accessible from certain points, and at other points a new event was triggered. I moved out to the fringe of the desert on the other side of these structures and encountered elephants with the legs of insects towering over me as they made their way past. They continued to walk throughout the scene. Elsewhere, I turned a corner and encountered a lobster sitting on top of a phone. Audio in the scene included soft rumblings and ambient effects, said to represent Dali’s potential thoughts. Few words were distinguishable to me, but the audio really added to the dreamlike state of the place.
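The reticle behavior in Dreams of Dali (visible only when the gaze is near an orb) could be approximated with an angle check between the gaze direction and the direction to each orb. This is a guess at the general idea, not how Dreams of Dali is actually built; the function names and the 10° threshold are hypothetical.

```python
import math
from typing import Iterable, Tuple

Vec3 = Tuple[float, float, float]


def _normalize(v: Vec3) -> Vec3:
    """Scale a non-zero vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def angle_to(gaze_dir: Vec3, eye: Vec3, target: Vec3) -> float:
    """Angle in degrees between the gaze direction and the eye-to-target direction.

    Assumes target != eye (otherwise the direction is undefined).
    """
    to_target = _normalize(tuple(t - e for t, e in zip(target, eye)))
    g = _normalize(gaze_dir)
    dot = sum(a * b for a, b in zip(g, to_target))
    return math.degrees(math.acos(max(-1.0, min(1.0, dot))))  # clamp for float error


def show_reticle(gaze_dir: Vec3, eye: Vec3, orbs: Iterable[Vec3],
                 threshold_deg: float = 10.0) -> bool:
    """Show the reticle only when some orb lies within threshold_deg of the gaze."""
    return any(angle_to(gaze_dir, eye, orb) <= threshold_deg for orb in orbs)
```

Because the test is angular, a distant orb has to be nearly centered in view before the reticle appears, which mirrors the distance problem seen in the gaze playtest.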

During the teleports themselves, the user slides quickly through the space to the given point. There’s no fade in/fade out or blink, so you can see the ground moving below you and your destination. The only time this became an issue for me was when ascending or descending the long spiral stairs in the tower; I didn’t realize the next orb would throw me directly up to the top. Not too dissimilar from when Saruman propels Gandalf to the top of Orthanc in The Lord of the Rings: The Fellowship of the Ring.

Anyway, I feel that context is important in this experience. Had I been visiting the museum, I probably would have had more appreciation for the things included in the experience. I have a very basic knowledge from taking Art History early in college, but my understanding of Dali doesn’t go much further than that. As a user at home, I have to seek out that additional information independently of the experience itself. I also wonder if the “linear 360 experience” is crafted to form a particular narrative or is just a path that covers all of the points. I didn’t have time to go into that this time around, but I’d like to make a closer comparison in the future.

I moved on to The Night Cafe: A VR Tribute to Van Gogh, made by Borrowed Light Studios.

I’m going to have to revisit this experience, as the only way to navigate was with a console controller. That’s an odd choice for the only source of input, but until I can get one set up, I’ll just give my static impressions of the first scene. The assets and animations are very beautiful, and the style of the room definitely matches the painting. In the spaces where they had to guess at detail, such as the wall and door behind me, the makers said they took reference from other paintings, and they matched his style pretty well. The intro leading up to this sequence showed an image of The Night Cafe painting before fading into the actual scene.

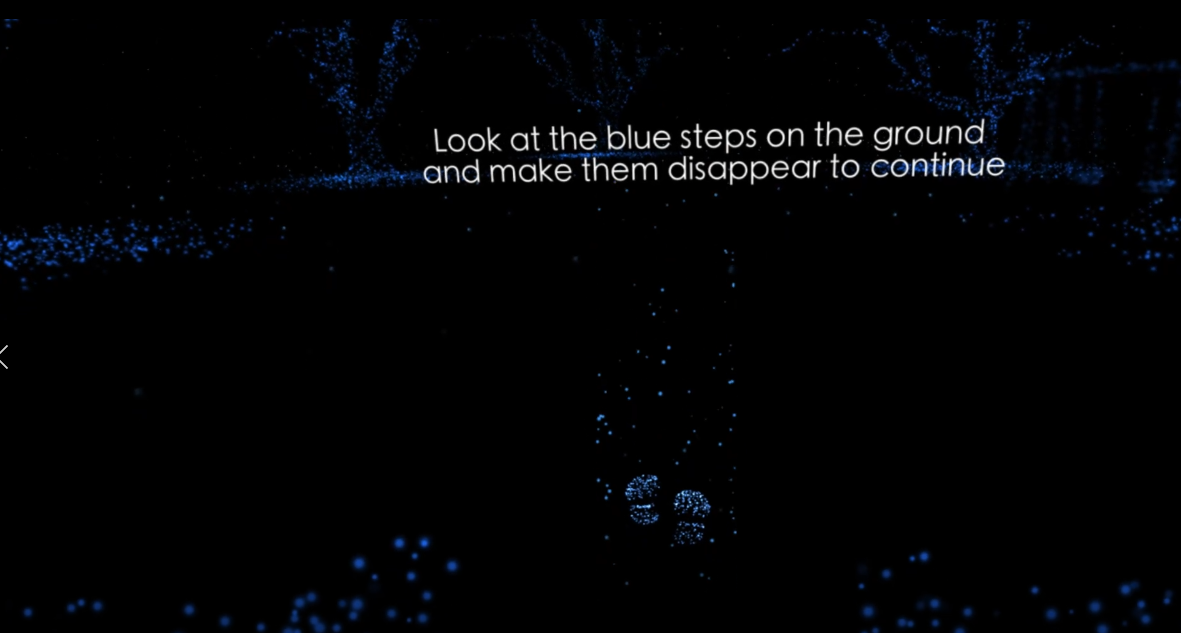

The last experience was Phone of the Wind, an interactive film based on a phone booth in Japan used to connect and speak to departed loved ones.

This phone booth is real and well documented: it sits in the town of Otsuchi, Japan, and became a place for people to grieve and heal after the 2011 earthquake and tsunami. In the experience, you listen to three people talking to their loved ones in the booth. I was really surprised by the types of visual content included; users begin the experience from the perspective of a drone flying over the actual booth. As each story begins, the world transforms into an animated scene representing what is being said. At the beginning and end of the experience, the world is made of 3D assets. I’m not sure the transitions were smooth, since each one transformed the entire world, but they definitely added variety and personality to each story.

The interactive aspect of this film comes at the very end. The user is given the option to enter the booth themselves and leave a message for a loved one. I really love that this was part of the experience, and I can see some similarities between this and Where Thoughts Go. Users can choose to skip it and move on, or take a moment to privately reflect. The few instances of movement, such as the flying drone or the user entering the phone booth, are forced; there is little control over your location in the scene.

It was difficult to find any information about this experience beyond what’s given on the Oculus store; the developer’s website is now private. But snooping around the reviews was… its own experience. Some users loved it and were crying; others thought it was stupid and shouldn’t be allowed on the store because it wasn’t “fun”. From their comments, I gather that many, like me, had no idea this was a real place with its own history and meaning to a community, not just a filmmaker’s idea. While I don’t think that information is necessary for the experience to work, I wonder why it isn’t more readily given and tied to the real-life events. Knowing the history helped ground the story for me.

CONCLUSIONS

These were very different interpretations of real objects and places. It was interesting to see how some of the gaps in information were filled in with reference material, but for the two I was able to fully experience, I think the outside context was not fully provided to the user. I felt I needed that additional information to truly enjoy and understand the content to its fullest extent. I think designers are taking experiences that were initially part of exhibitions and putting them on the Oculus or Steam stores without accounting for that missing information, or for how the experience outside of the headset is part of the overall design. This week’s outside research has really made these points clear to me, and has helped clarify my thoughts about how to organize the content outside of VR in my framework.