Reaching the end of my Phase 1 investigations reinforced one very powerful concept: context is key.

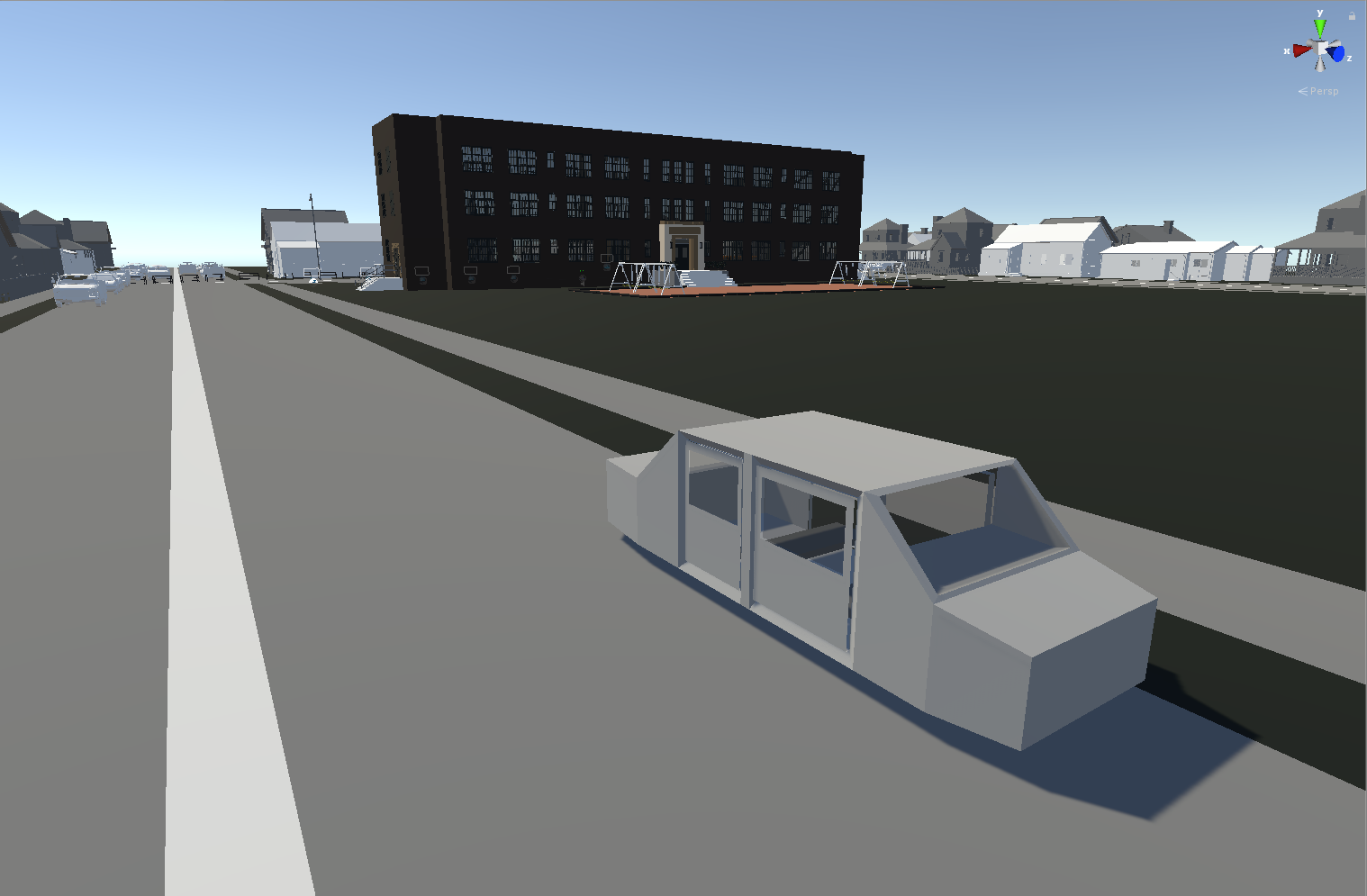

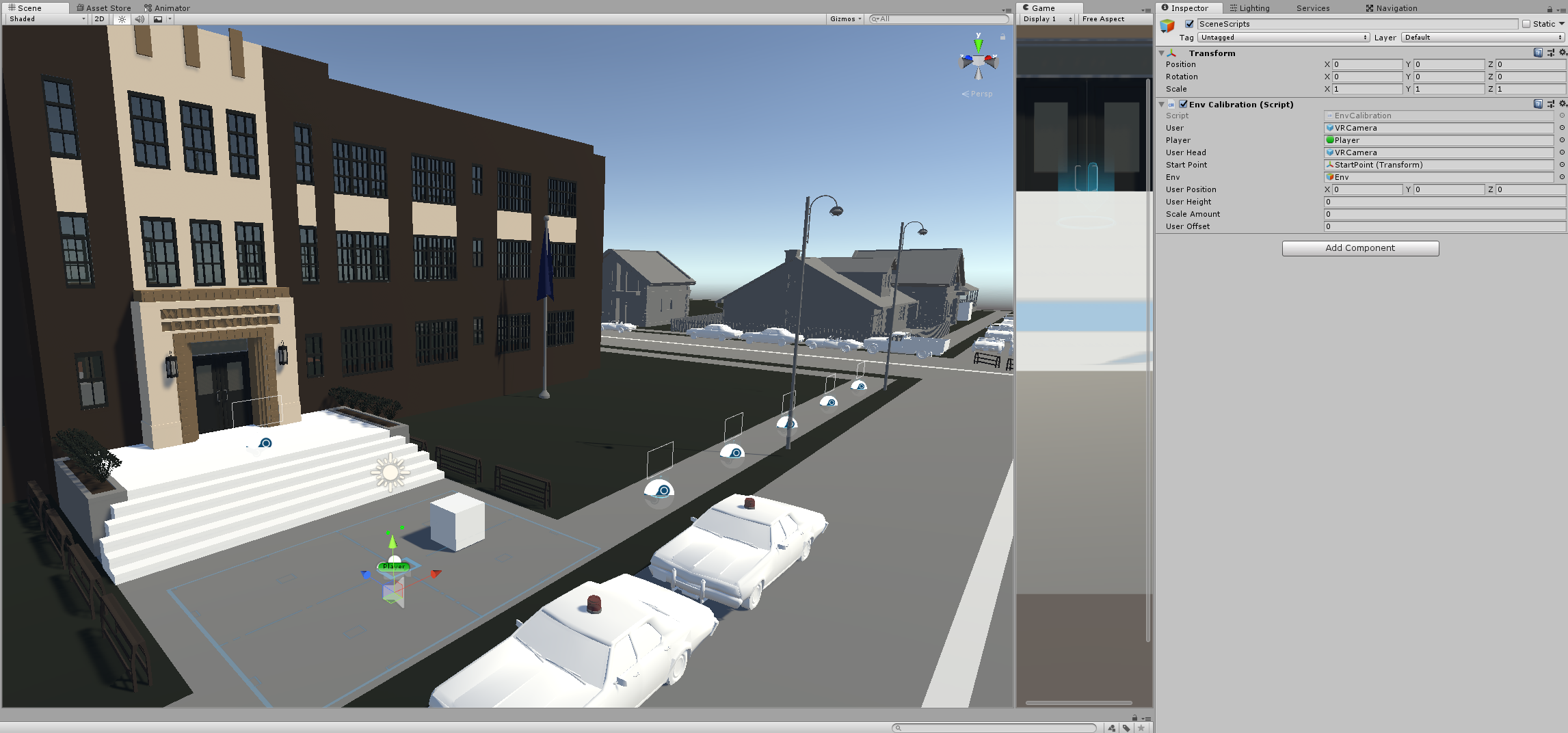

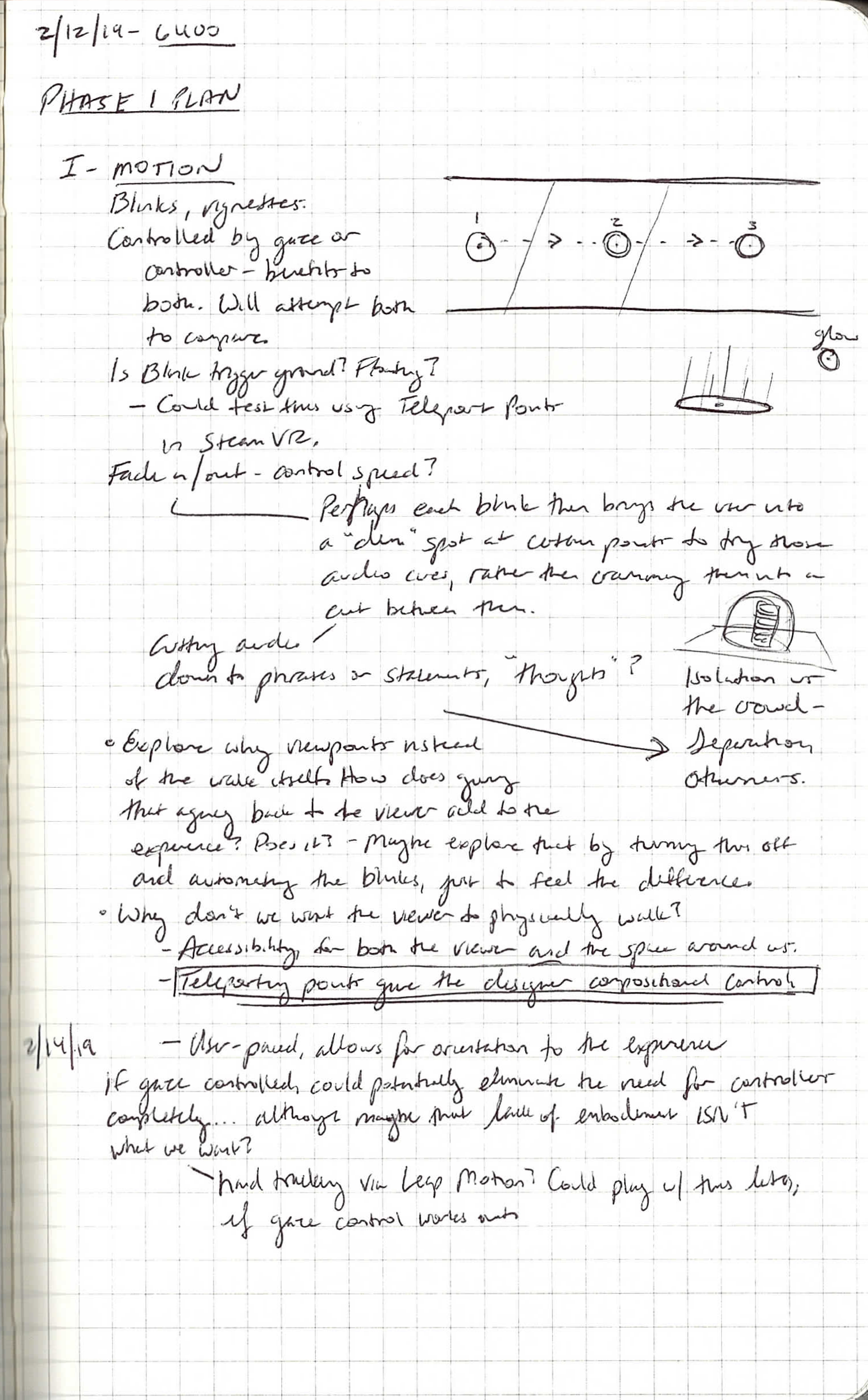

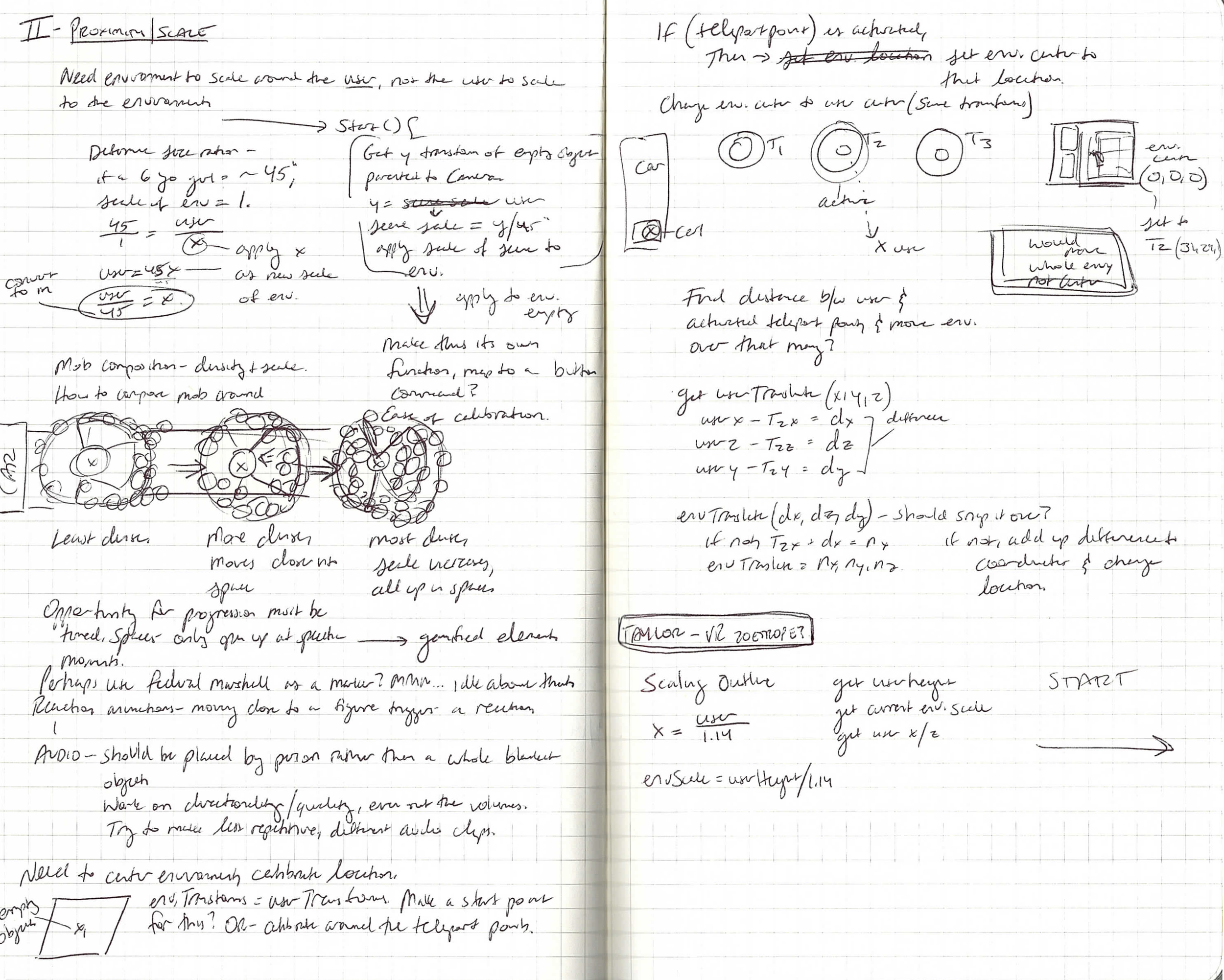

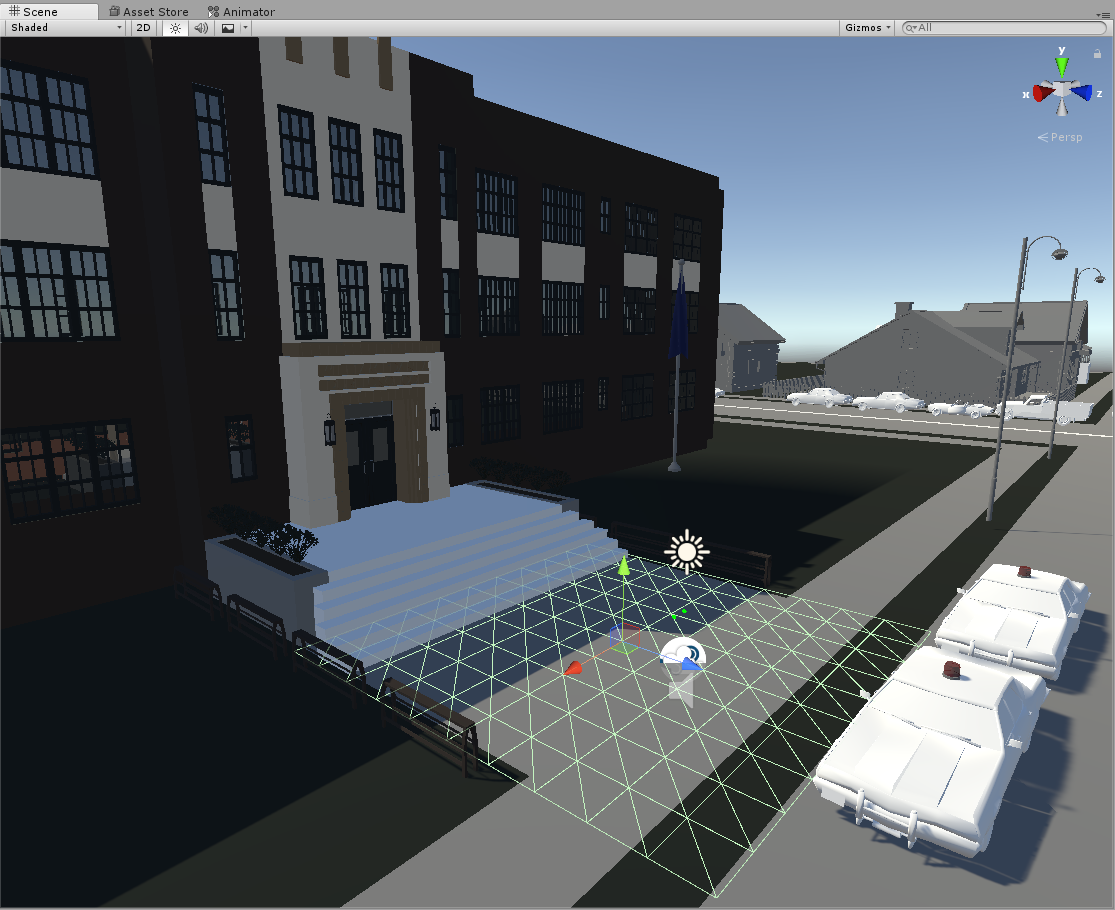

My work over the last five weeks has investigated how designers move a user in VR, specifically in relation to user agency and how designers can direct users down a particular path. Within this particular scene, that path was a long sidewalk that takes quite some time to traverse. I experimented with teleporting using the prefabs available in SteamVR, with the scale and location of the user in VR, and with the transitions between different kinds of motion - from moving car to teleport points to a plane.

Even though I was not able to achieve everything I outlined in my initial schedule, everything was functional and pretty neat in the scene.

However, feedback that I received after demonstrating the scene and then going through it myself was that teleporting actually takes the user out of the experience. The appearance of the teleport points is unnatural in this space that I am trying to create, and using a controller itself is arguably a hazard to immersion. It brings the user’s thought back around to what they’re doing instead of what the people around them are doing. I’m incredibly grateful for that insight - I hadn’t thought of it from that perspective before, but having made the scene I have to agree.

I was really lucky to get to show this scene to Shadrick Addy, a designer and MFA student who worked on the I Am A Man VR experience, who sat down with Tori and me for an hour to discuss our work on this project. He offered much of the same critique about the teleporting, pointing out that in context it doesn’t make sense. Masking this motion with something that fits in context with the story would be much more effective - for example, using gaze detection to trigger the movement forward. A mother urging a daughter forward might look back, gesture, or verbally ask her to keep moving. We could build a mechanic around this, where the user looking at the mother after one of these cues triggers their motion forward in the scene and along the narrative.
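To make the idea concrete for myself, here is a rough sketch of how a gaze trigger like that could work. It is plain Python rather than engine code, and every name in it (`is_gazing_at`, `GazeDwellTrigger`, the 10 degree cone, the 1.5 second dwell) is a hypothetical placeholder I made up for illustration, not something from SteamVR or from our project. The assumption is that each frame we know the headset position, the direction it faces, and where the mother character is standing.

```python
import math


def is_gazing_at(head_pos, head_forward, target_pos, max_angle_deg=10.0):
    """Return True if the target sits inside a cone around the view direction.

    All positions and directions are (x, y, z) tuples. The cone half-angle
    is an assumed tuning value, not a measured one.
    """
    to_target = [t - h for t, h in zip(target_pos, head_pos)]
    dist = math.sqrt(sum(c * c for c in to_target))
    fwd_len = math.sqrt(sum(c * c for c in head_forward))
    if dist == 0.0 or fwd_len == 0.0:
        return False
    # Cosine of the angle between "where I am looking" and "where she is".
    cos_angle = sum(a * b for a, b in zip(head_forward, to_target)) / (dist * fwd_len)
    cos_angle = max(-1.0, min(1.0, cos_angle))  # guard against rounding error
    return math.degrees(math.acos(cos_angle)) <= max_angle_deg


class GazeDwellTrigger:
    """Fires once after the user has held their gaze for `dwell_seconds`."""

    def __init__(self, dwell_seconds=1.5):
        self.dwell_seconds = dwell_seconds
        self.elapsed = 0.0
        self.fired = False

    def update(self, looking, dt):
        """Call once per frame; returns True only on the frame the trigger fires."""
        if not looking:
            # Looking away resets the timer and re-arms the trigger.
            self.elapsed = 0.0
            self.fired = False
            return False
        self.elapsed += dt
        if not self.fired and self.elapsed >= self.dwell_seconds:
            self.fired = True
            return True
        return False
```

In a frame loop this would be something like `if trigger.update(is_gazing_at(head_pos, head_forward, mother_pos), dt): start_moving_forward()`, where `start_moving_forward` stands in for whatever actually moves the user. The dwell timer is there so that a passing glance doesn’t launch the user down the sidewalk.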

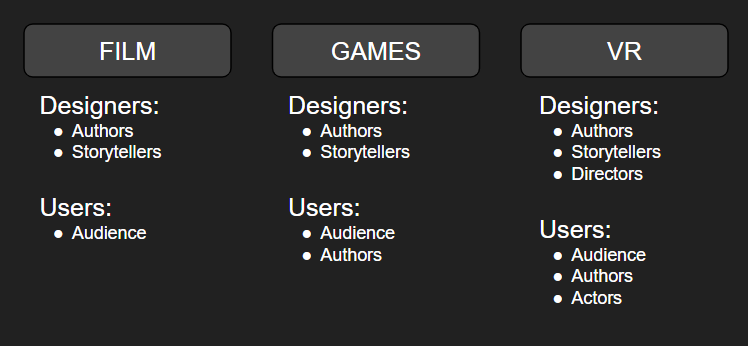

Using this gaze detection would have the benefit of eliminating controllers completely, something I discussed previously but didn’t fully understand the benefit of until having these conversations. In discussing the immersion this can bring, I asked him about a common structure I’ve seen in VR experiences so far - this sandwiching of VR between two informational sessions. Our project does this with an introductory Prologue as well, but my question was whether to add information in the experience as it progresses or to leave it alone. He suggested that the addition of information would only serve as a distraction from the scene itself, a distraction that might prevent the emotional reaction and/or conversation that Tori and I are attempting to create. There are some really interesting layers there: how the main scene is encased in narrative, how the prologue and “epilogue” scenes frame the experience, how the use of VR itself is encased within a system that provides context for the technology, and how that space is designated and placed within an exhibit discussing larger themes - all informing each other.

Coming back from the tangent, Phase 1 helped answer my question about what type of motion I should be considering within this space and why. I was able to work out some of the smaller technical bugs, which will pay off in the long run. And I was able to spend a lot of time doing outside research on VR experiences to help understand the decisions currently being made in other projects.

What’s Next

Phase 2 naturally follows Phase 1, and I think the best option here is to build on what I have. I learned a lot over the last few weeks and I would really love to develop some gaze control mechanics. Being able to move forward through a crowd powered by gaze, and testing the crowd reactions that I didn’t get to in Phase 1, would go a long way towards development this summer and in the fall. I’ll be articulating this plan a little better next weekend after I’ve written this proposal and work has begun. I will also be recording Phase 1 and uploading that to my MFA page for documentation.

OUTSIDE RESEARCH

Spring Break happened since my last post and I took advantage of the time.

Museum: Rosa Parks VR

Over break I found myself at the Underground Railroad Freedom Center in Cincinnati, OH, where they’re currently hosting a Rosa Parks VR exhibit.

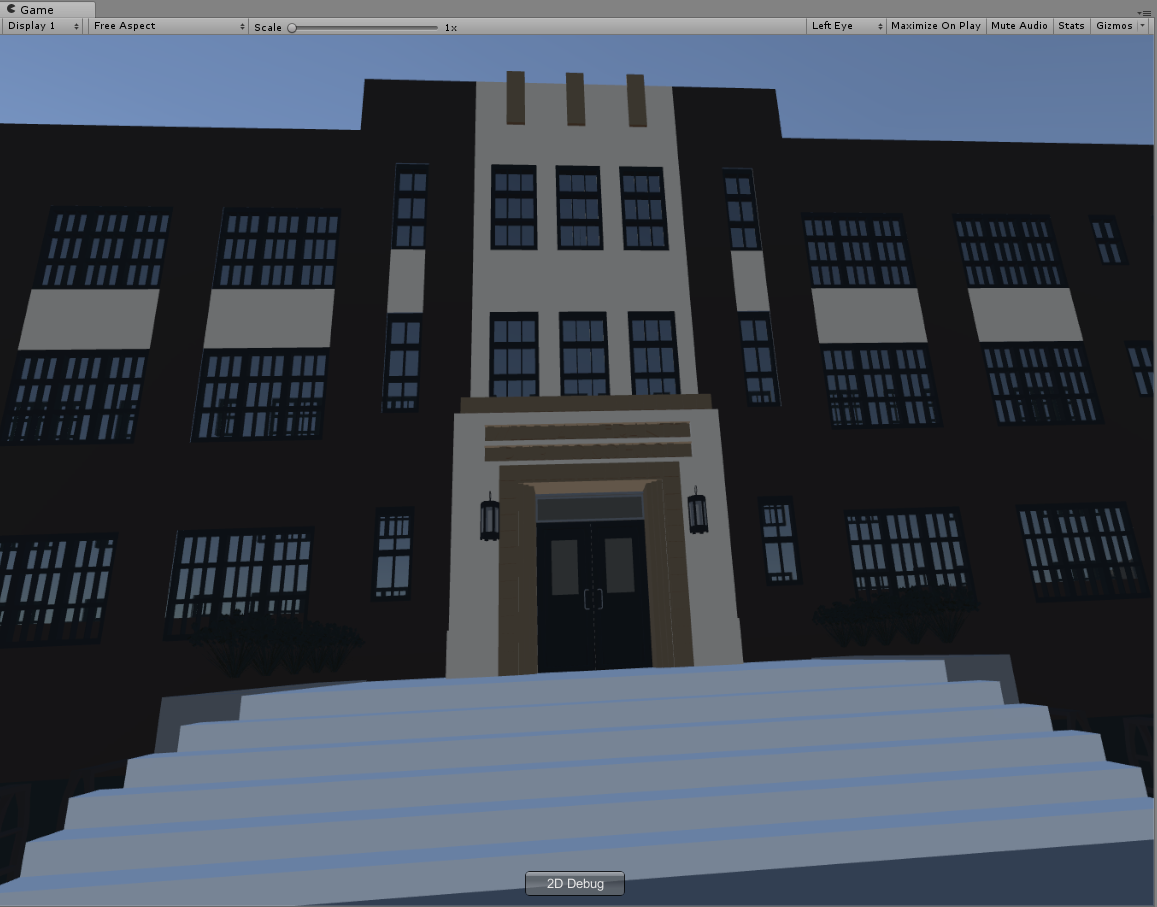

I was really interested in how this experience was going to be placed in the Freedom Center, and what the VR content was going to consist of. This was my first time using VR in a public space, plus I came in not knowing much about the experience itself. I tried not to watch videos or read articles - although looking for the video above I realized it’s incredibly difficult to find any information about it. Up on the 3rd floor of the Freedom Center in a corner to the right are four seats from a school bus on a low platform, and a table for the center attendant to take tickets/give information. The experience was made in Unity, uses Samsung Galaxy 9s and mobile headsets (which were cleaned after every person), and headphones.

Full disclosure, my headset had something VERY wrong with the lens spacing and I ended up watching the experience with one eye closed.

I saw the same sandwich structure here as what Tori and I are using - an introductory sequence, an uninterrupted 360 video experience of the user being confronted by first the bus driver and then a police officer, and then an exit sequence discussing historical ramifications and present day context, all narrated by a voice actor speaking as Rosa. Having the users sit on the bus seats was a really nice haptic touch that I enjoyed - that weird texture and smell just can’t be faked. The user is embodied in the experience, being able to look down and see what she was wearing on that day. Each time slot takes a group of 4 people, with a new group every 5-10 minutes, and in our time waiting I saw people of all ages coming over to go through the experience. In order to start, the user has to look directly into the eyes of an image of Rosa Parks for a certain length of time.

I thought that the embodiment was a really effective choice for a static seated experience that requires little to no active participation. The user is reminded by the attendant at the beginning that they can look around in all directions. I was most surprised by how it was situated in the center. Fortunately Rosa Parks is a pretty well known figure in history, but if you didn’t know anything about her there was nothing to inform you in the surrounding area. The informational segments in the experience spoke mostly of what was happening in that time period. Being a standalone attraction sparks curiosity in wanting to know about the experience while being part of a larger exhibition may give greater context in the long run… so I suppose it depends on your goals for the user where this is placed. I think I personally would have liked more information to surround the experience, especially considering how complex the other two exhibits on the floor were.

I think I would need to do this experience again to examine how I felt coming out of it. I wasn’t especially affected - more distracted by the odd 360 video editing happening in the middle to try to increase depth and the funky lens adjustment in my headset - but I did appreciate the nature of the experience itself and its placement in the center. And I was able to find parallels between their development and what Tori and I are working on.

Museum: Jurassic Flight

This was the other VR experience I got to do inside of a museum. We discovered it completely by accident in the Museum of Natural History and Science in the Cincinnati Museum Center.

Me flying as a pterodactyl in Jurassic Flight. Skip to 0:19 for the actual experience start.

After Rosa Parks VR, this was as opposite of a VR experience as I could manage. Jurassic Flight makes use of equipment called Birdly, which I last saw in a video of its prototyping stage. The experience requires you to lie on your stomach on this device, arms out to the side, Vive Pro strapped to your head. You take flight as a pterodactyl, soaring above trees, rivers, and mountains, observing the other dinosaurs living their lives. There is no goal here, no informational aspect to the experience. It’s all about the haptic feedback. There’s a fan at the front of the device that increases and decreases with the user’s air speed, the device tilts forward and backward based on your pitch in the game, and you control direction with the paddles at the end of the “wings” of the device.

The experience is situated just to the right of a big dinosaur exhibit, which provided plenty of context before actually going into it. It’s not particularly thought provoking or educational but it does add on to the content already addressed in the museum from a fun perspective. It’s about 5 minutes, very scenic and peaceful (minus the initial few seconds of motion sickness during a dive), and it was made in Unreal so the environment and lighting were really stunning.

Again, not really able to make a connection here (pre)historically or in structure of the experience, but I was really fascinated by the haptic feedback that occurred and that novel flying experience.

Anne Frank House VR

I found this experience on the Oculus Store and had to give it a go. Unlike the past two, I went through this experience at home on my own machines. I read Anne Frank’s Diary in elementary school, though most of the details escaped me as an adult. This experience recreates the Franks’ annex as it was while they were in hiding from the Nazis.

Again I found the informational sandwich structure. The user is offered the option to go through a story mode or a tour mode through the annex - I chose the story mode. This begins with a fairly long narrated introduction with historical images, followed by the exploration of the annex. The experience requires very little interaction from the user beyond pointing and clicking to the next point. Once there, narration begins, and we hear Anne Frank telling us about her daily life in each of these spaces. It’s a linear path through the space and the only interaction is really moving from point to point.

It’s beautifully recreated. The quality of the environment really called me to examine it closely. I wanted to see the pictures on the walls, the books scattered over the bed, what crossword questions were in the paper. Each progression revealed a little more about the family and what their everyday life was like. Hearing these stories in contrast with the empty spaces the user explores creates a wistful mood. I didn’t want to make any noise myself due to the emptiness and hearing about how the family had to remain quiet during the day to avoid rousing suspicion.

The whole tour took me around 20 minutes, and I felt like I really did learn a lot just from seeing the space and hearing fragments about life related to each segment of the house. The choice of motion likely comes from the fact that this seems to be an experience made for mobile and thus requires a controller of some kind. Beyond the point of the cursor, I never see the controller itself. It seems like a good compromise that doesn’t threaten the immersion in the experience.

Traveling While Black

I’ve been meaning to do this experience for the last three months. Now that I have, I can almost guarantee I’m going to need to do it again.

Traveling While Black addresses the issues faced traveling across the country in the past by black Americans, starting in the 1940s and ending in 2014. The interactions and interviews all take place in Ben’s Chili Bowl, serving as a hub and safe space throughout the course of the experience. Every interview occurs in a booth, with the user switching locations from one seat to another with each new person. The visuals themselves are beautifully executed and edited, running strong parallels between past and present. At the very end of the experience, the user sits across the table from Samaria Rice as she speaks about the day her 12 year old son was shot by police in Cleveland, OH.

There was no embodiment, no interaction - the user is watching and listening throughout the whole experience. Placing the user in an intimate setting like a diner booth and in close proximity to those being interviewed allows the user to feel like part of the scene. It’s a 360 video that’s about 20 minutes long, ending with the point that safety is still not guaranteed for black Americans.

I came out of the experience strongly emotional and had to sit for a while to really absorb everything. Truthfully, I’m going to need to do the experience again to actually analyze the structure and think about the decisions being made and how decisions for 360 video may differ from animated VR. But I do know that this is the kind of effect I want to have on the viewers of our project. And I wonder if anything stylized, not film, could create that level of personally jarring human connection.

Conclusions

Having gone through a few historical VR experiences now, I’m seeing this sandwich pattern of information more and more. And I think I understand for the most part why this is occurring. I’m also seeing how multiple narratives are being organized cohesively as well as how one narrative can be distilled to give a whole picture without saturating the user with information. I’m going to continue with this next week and see what other kinds of thought-provoking narrative experiences I can find, as well as any other existing museum VR exhibits - of any kind - that I might go and explore.